September 8, 2025

Updated: September 8, 2025

The latest deepfake numbers on AI-driven fraud, voice cloning, IDV bypass, and what actually works to defend.

Mohammed Khalil

In the time it takes to read this paragraph, another deepfake attack has likely been attempted. In 2024, they occurred at a rate of one every five minutes. This isn't hyperbole; it's the new reality of digital trust. Deepfake technology, powered by generative AI, has officially crossed the chasm from a niche curiosity into a mainstream tool for sophisticated crime.

The numbers are no longer theoretical; they represent billions in actual and projected losses, with generative AI fraud in the U.S. alone expected to hit $40 billion by 2027, according to the Deloitte Center for Financial Services. This is a key driver behind overall cybercrime trends and costs.

This article isn't just a collection of numbers. It's a threat briefing for 2025. We'll dissect the data to reveal how these attacks work, why our natural defenses are failing, and what actionable steps businesses must take to build resilience.

Understanding these deepfake statistics is the first step in defending against a threat that targets the very nature of identity and trust.

A deepfake is a piece of synthetic media (an image, video, or audio clip) in which a person’s likeness or voice has been replaced or altered using artificial intelligence. Think of it as a digital puppet, but one that can look and sound indistinguishable from a real person.

The technology behind it is primarily powered by a machine learning architecture called a Generative Adversarial Network (GAN). In simple terms, two AIs are pitted against each other: a "generator" that creates the fakes and a "discriminator" that tries to spot them.

They train each other relentlessly until the fakes become so convincing they can fool both the AI and human eyes. Newer, more powerful methods like Diffusion Models and Transformer Models are making them even more realistic and harder to detect.

The core of this technology is its ability to exploit vulnerabilities in how we perceive reality, much like how attackers exploit common network vulnerabilities to breach a technical perimeter.

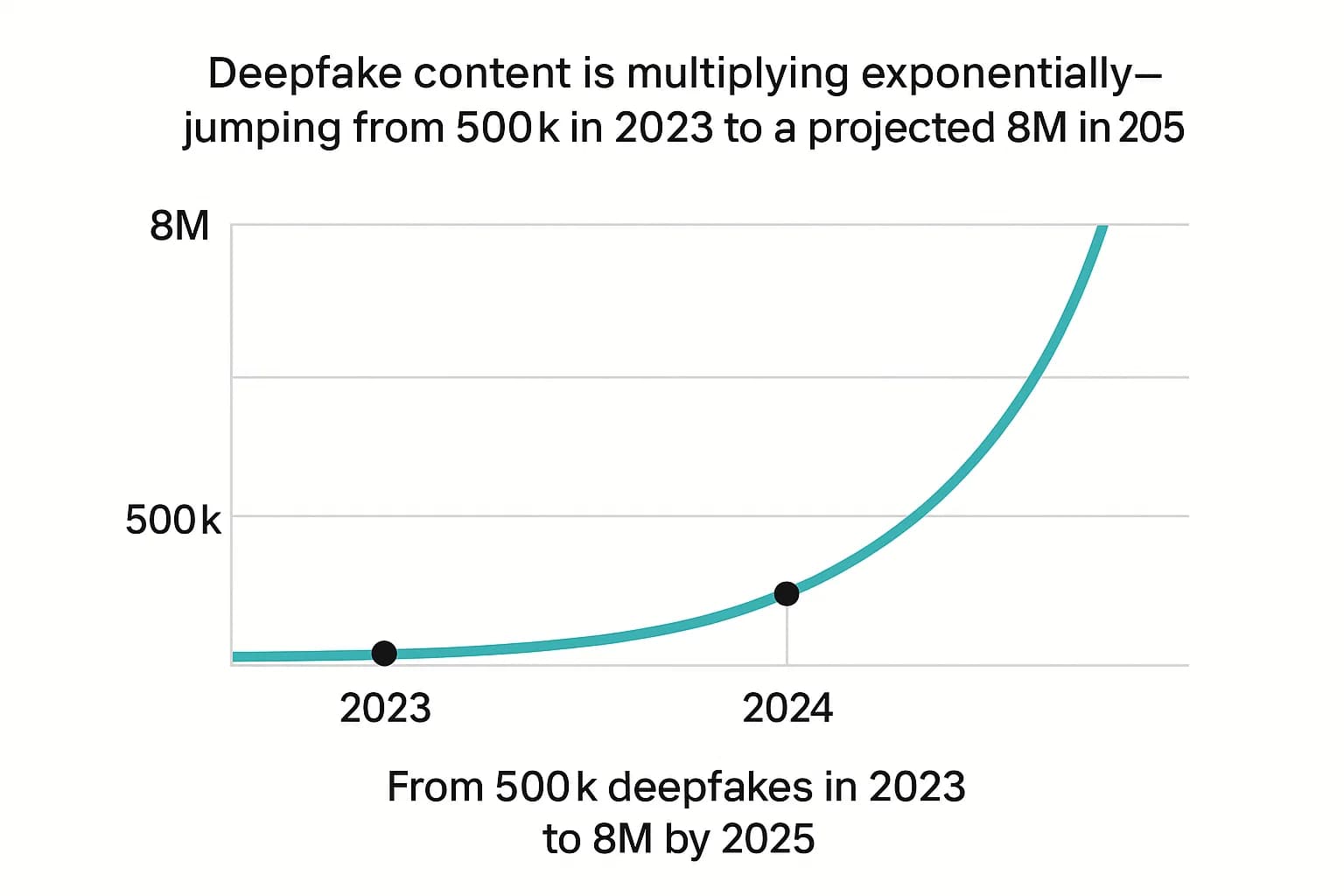

Deepfake volumes are doubling every few months, with an estimated 8 million files projected to be shared in 2025. The data paints a clear picture of a threat that is not just growing but accelerating. The sheer volume of synthetic media, combined with its weaponization for financial gain, has created a perfect storm for businesses and individuals alike.

The volume of deepfake content shared online is exploding. After an estimated 500,000 deepfakes were shared across social media platforms in 2023, that number is projected to skyrocket to 8 million by 2025.

That sixteen-fold jump over two years implies the volume roughly quadrupling each year (about 300% annual growth), and some estimates put the annual increase in deepfake videos as high as 900%. This isn't linear growth; it's a viral proliferation that outpaces nearly every other cyber threat.
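As a rough check on these figures, the sketch below derives the annual growth rate implied by the two endpoints quoted above (500,000 in 2023 and 8 million in 2025) and contrasts it with a flat 900% annual rate. The function name `annual_growth_pct` is illustrative and not taken from any cited report; the calculation assumes a constant year-over-year rate.

```python
def annual_growth_pct(start: float, end: float, years: int) -> float:
    """Constant annual growth rate (in percent) that takes `start` to `end` over `years`."""
    multiplier = (end / start) ** (1 / years)
    return (multiplier - 1) * 100


# Endpoints quoted in the article: 500,000 deepfakes in 2023, 8 million projected for 2025.
implied = annual_growth_pct(500_000, 8_000_000, years=2)
print(f"Implied annual growth, 2023 to 2025: {implied:.0f}%")

# For comparison: 900% annual growth means the volume multiplies by 10 every year.
volume_at_900 = 500_000 * (1 + 900 / 100) ** 2
print(f"2025 volume if growth were 900% per year: {volume_at_900:,.0f}")
```

Under these assumptions the endpoints imply a fourfold increase per year, while a sustained 900% rate would put the 2025 figure well above 8 million. The two numbers are therefore best read as separate estimates rather than one consistent rate.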

This surge is reflected in detection metrics across all industries. In 2023 alone, the number of detected deepfake incidents saw a 10x increase compared to the previous year, signaling a rapid and widespread operationalization of the technology by malicious actors.

While deepfakes are used for various purposes, the primary malicious use case driving investment and innovation by criminals is fraud. Identity fraud attempts using deepfakes surged by an incredible 3,000% in 2023.

The financial impact of these attacks is staggering. In 2024, businesses lost an average of nearly $500,000 per deepfake-related incident. For large enterprises, the cost was even higher, with some losses reaching up to $680,000. See the SOC 2 Penetration Testing Guide 2025 for deeper context on how identity integrity is assessed. Looking ahead, the trend is grim.

A forecast from the Deloitte Center for Financial Services projects that fraud losses in the U.S. facilitated by generative AI will climb from $12.3 billion in 2023 to $40 billion by 2027, a compound annual growth rate of 32%.
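The compound annual growth rate behind a forecast like this can be reproduced from its endpoints. The sketch below is a plain endpoint calculation, not Deloitte's methodology; the helper name `cagr` and the choice of four compounding periods (2023 to 2027) are assumptions made for illustration.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / periods) - 1


# Endpoints quoted in the article, in billions of U.S. dollars.
rate = cagr(12.3, 40.0, periods=4)
print(f"Endpoint CAGR, 2023 to 2027: {rate:.1%}")

# What the quoted 32% rate would produce if compounded over the same four years.
projected_2027 = 12.3 * (1 + 0.32) ** 4
print(f"2027 value at 32% per year: ${projected_2027:.1f}B")
```

Run this way, the endpoints give a rate of roughly 34%, slightly above the quoted 32%, and compounding 32% for four years lands somewhat below $40 billion. The small gap most likely reflects rounding or a different base period in the published forecast.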

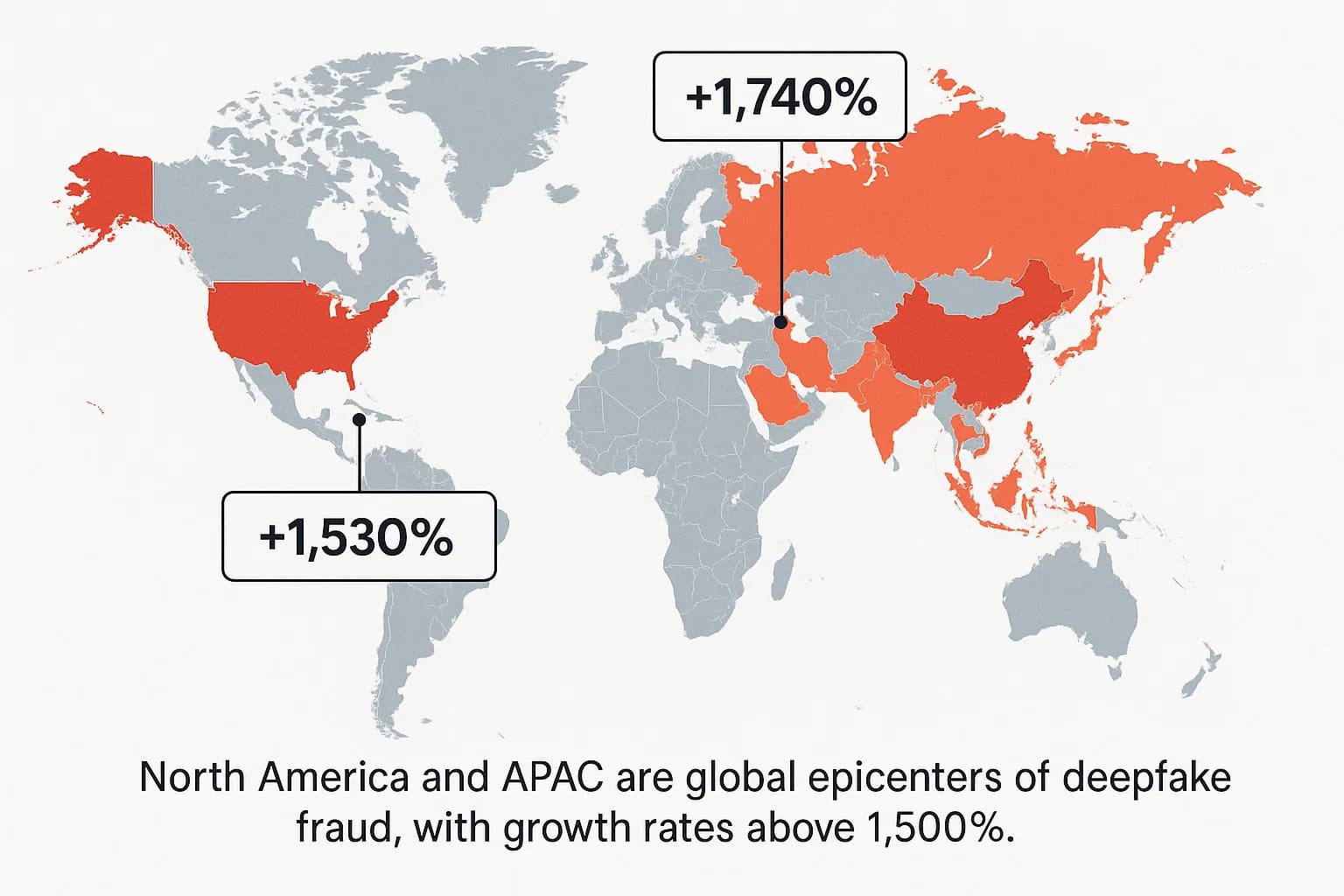

This threat is not uniformly distributed. Attackers are focusing their efforts on high-value digital economies where online financial services are prevalent.

This rapid escalation mirrors the growing sophistication of penetration testing markets across regions, especially in Central and Eastern Europe, a trend covered extensively in Penetration Testing Companies Czech Republic 2025, as organizations strengthen their identity verification and security testing controls.

The rapid growth of malicious deepfake creation is dangerously outpacing our ability to defend against it. While the market for AI detection tools is growing at a healthy compound annual rate of around 28-42%, the threat itself is expanding at rates of 900% or even 1,740% in key regions.

This creates a massive "vulnerability gap." Worse, the effectiveness of these defensive tools plummets by 45-50% when they are taken out of controlled lab conditions and used against real-world deepfakes.

This widening gap means we are fundamentally losing the technological arms race. Any security strategy that relies solely on buying the latest detection tool is flawed and destined to fail.

The strategic focus must shift from pure technology to procedural resilience: building robust verification processes that can withstand deception even if a deepfake is technically perfect.

This surge in sophisticated attacks is driving enterprises to seek out Top Rated Penetration Testing Companies US 2025 to audit their defenses and close critical security gaps.

To understand the risk, it's crucial to move beyond abstract numbers and look at the concrete attack vectors being deployed in the wild. From multimillion-dollar corporate heists to widespread consumer scams, deepfakes are the primary enabler.

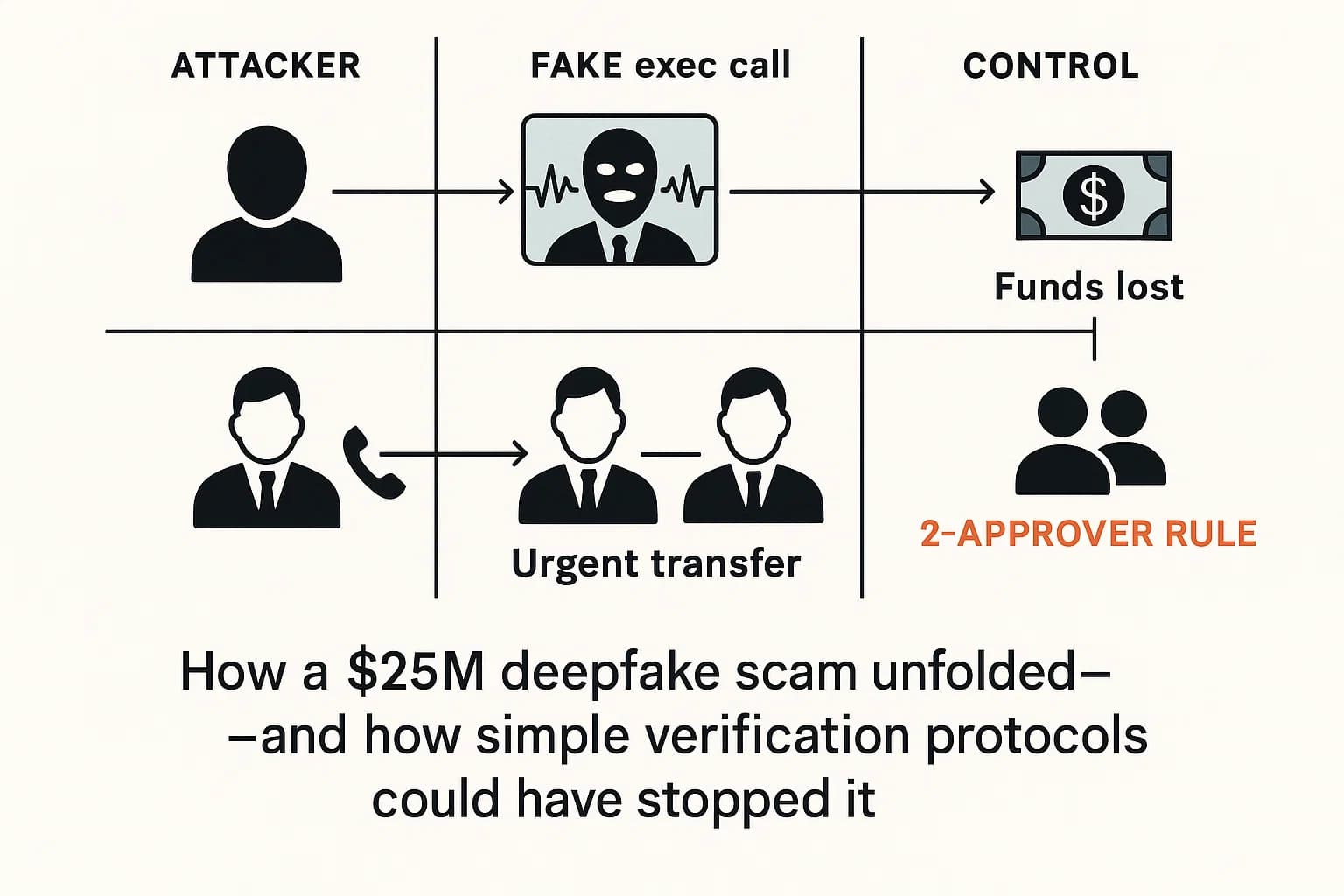

The most visceral example of a high-stakes deepfake attack is the February 2024 incident where a finance worker at the global engineering firm Arup was tricked into wiring $25 million to accounts controlled by fraudsters.

This was not a simple phishing attack. The attack involved a sophisticated, multi-person video conference call featuring deepfaked, AI-generated likenesses of the company's chief financial officer and other senior executives.

This case proves that complex, multimodal attacks are no longer theoretical; they are happening now, with catastrophic results, and serve as a powerful real-world account takeover case study.

This attack was an evolution of earlier methods. In 2019, a UK energy firm was defrauded of €220,000 via a deepfaked voice clone of its CEO, demonstrating that audio was the initial entry point for this type of fraud.

With the increasing sophistication of video deepfakes, these Business Email Compromise (BEC) and impersonation attacks have become far more convincing and dangerous. It's a persistent, high-volume threat, with CEO fraud now targeting at least 400 companies per day.

Voice cloning, or audio deepfaking, has become the most democratized and widespread form of deepfake attack. A 2024 McAfee study found that 1 in 4 adults had encountered an AI voice scam, either directly or through someone they know, with 1 in 10 having been personally targeted by one.

This trend aligns closely with the broader escalation of deception tactics documented in Social Engineering Statistics 2025, which highlights how attackers increasingly exploit trust, urgency, and emotional triggers to manipulate victims at scale.

The barrier to entry for this type of attack has completely collapsed.

Financial institutions, cryptocurrency exchanges, and fintech platforms all rely on remote Identity Verification (IDV) and Know Your Customer (KYC) processes to onboard new users. These digital front doors are now a primary target for deepfake attacks.

Fraudsters use "face swap" deepfakes and virtual cameras to fool the liveness detection checks that are supposed to ensure a real person is present. These attacks, which can bypass biometric authentication, increased by a staggering 704% in 2023.

The cryptocurrency sector has become ground zero for this type of fraud, accounting for an incredible 88% of all detected deepfake fraud cases in 2023. It is followed closely by the broader fintech industry, which saw a 700% increase in deepfake incidents in the same year. For organizations in these sectors, adhering to strict compliance frameworks is essential; consulting a PCI DSS Penetration Testing 2025 Guide can provide the necessary roadmap to secure payment data against these evolving threats.

The problem has become so severe that the research and advisory firm Gartner predicts that by 2026, 30% of enterprises will no longer consider standalone IDV and authentication solutions to be reliable in isolation. This signals an urgent, industry-wide need to shift toward more robust, multi-layered verification strategies, which is a major factor in rising data breach statistics and trends.

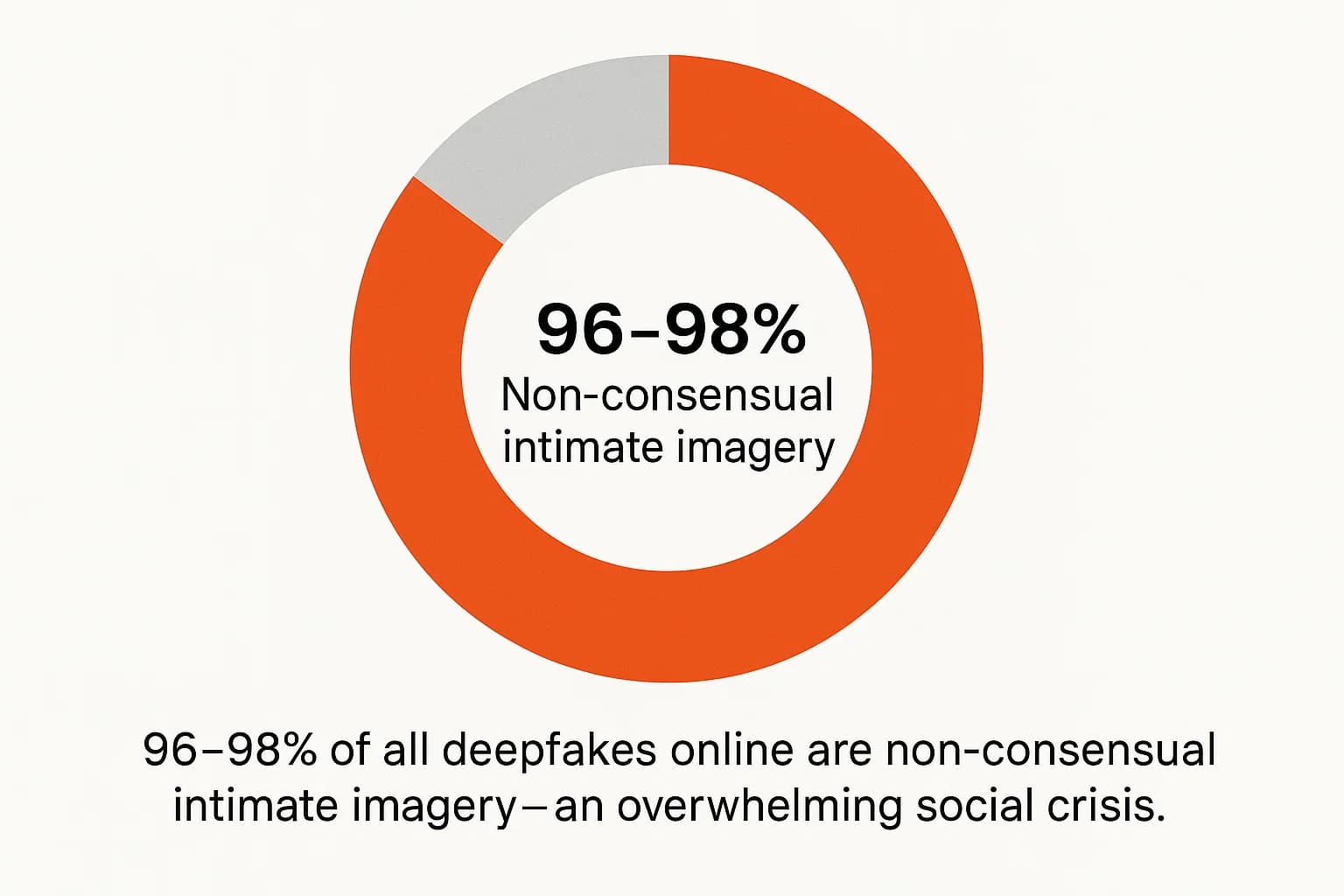

While financial fraud has the largest economic impact, the vast majority of deepfake content by sheer volume is Non-Consensual Intimate Imagery (NCII), often referred to as "deepfake pornography" or "revenge porn." Estimates consistently show that 96-98% of all deepfake videos online fall into this category.

This is a form of digital, gender-based violence that overwhelmingly targets women. Multiple studies have found that 99-100% of the victims in deepfake pornography are female.

The widespread nature of this abuse has spurred urgent legislative action globally, including the TAKE IT DOWN Act in the U.S. and new criminal offenses under the UK's Online Safety Act, which aim to provide victims with legal recourse and compel platforms to act.

Deepfake Attack Vectors at a Glance

The success of deepfake attacks is not just a story about sophisticated technology. It is equally a story about human psychology and organizational failure.

Technology exploits our cognitive biases, and our lack of preparedness turns a potential threat into a realized disaster. This phenomenon is clearly reflected in recent Social Engineering Statistics 2025, which highlight how manipulation tactics are evolving to leverage high-fidelity AI impersonations.

There is a profound and dangerous disconnect between how well people think they can spot a deepfake and their actual ability to do so.

This vulnerability is mirrored at the organizational level, where a lack of awareness has led to a systemic lack of preparedness. These gaps resemble findings seen in global penetration testing markets, including the U.S., where the need for stronger verification controls continues to rise. See Top Rated Penetration Testing Companies US 2025 for a market comparison of vendors addressing these weaknesses.

The data reveals a critical strategic error in how organizations approach this threat. The common impulse is to focus on "awareness": training employees to "spot the fake." However, the statistics on human detection accuracy prove this is a fundamentally flawed premise.

Humans are not, and will not become, reliable forensic analysts, especially when under the pressure of a sophisticated social engineering attack.

The $25 million Arup fraud was not a failure of an employee's detection skills; it was a failure of organizational process. A simple, mandatory, and non-negotiable protocol (for example, "All fund transfers over $10,000 requested via video or email must have voice confirmation via a trusted, pre-registered phone number") would have stopped the attack cold, regardless of how perfect the deepfake was.
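The example rule above can be sketched as a simple policy check that a payment workflow runs before releasing funds. This is a minimal illustration, not a description of any real payment system: the `TransferRequest` fields, the channel names, and both function names are hypothetical, and only the $10,000 threshold and the video/email channels come from the rule as quoted.

```python
from dataclasses import dataclass

APPROVAL_THRESHOLD_USD = 10_000          # threshold from the example rule
UNTRUSTED_CHANNELS = {"video", "email"}  # channels named in the example rule


@dataclass(frozen=True)
class TransferRequest:
    amount_usd: float
    channel: str              # how the request arrived, e.g. "video" or "email"
    callback_confirmed: bool  # True only after calling a pre-registered number


def requires_callback(request: TransferRequest) -> bool:
    """The rule applies to large transfers requested over video or email."""
    return (
        request.amount_usd > APPROVAL_THRESHOLD_USD
        and request.channel.lower() in UNTRUSTED_CHANNELS
    )


def may_release_funds(request: TransferRequest) -> bool:
    """Block the transfer unless the out-of-band callback has been completed."""
    if requires_callback(request):
        return request.callback_confirmed
    return True


# A request resembling the Arup scenario: large, made over video, no callback yet.
video_call_request = TransferRequest(25_000_000, "video", callback_confirmed=False)
print(may_release_funds(video_call_request))  # False
```

The check never inspects the video or audio itself. It depends only on whether the out-of-band confirmation happened, so the quality of the deepfake makes no difference to the outcome.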

This highlights the need to shift focus from awareness to adherence. Stop trying to make every employee a detection expert. Instead, design resilient verification procedures and train employees to adhere to them without exception. This is the crucial difference between a vulnerability assessment vs penetration testing; one looks for theoretical flaws, while the other tests whether real-world procedures and controls, such as those outlined in our SOC 2 Penetration Testing Guide 2025, hold up under a simulated attack.

Detection models overfit to lab datasets, and their accuracy can drop by roughly 50% on new manipulations. This is an asymmetric arms race in which the defense is constantly playing catch-up.

To combat the surge in identity fraud, two key technologies have emerged as the front line of defense.

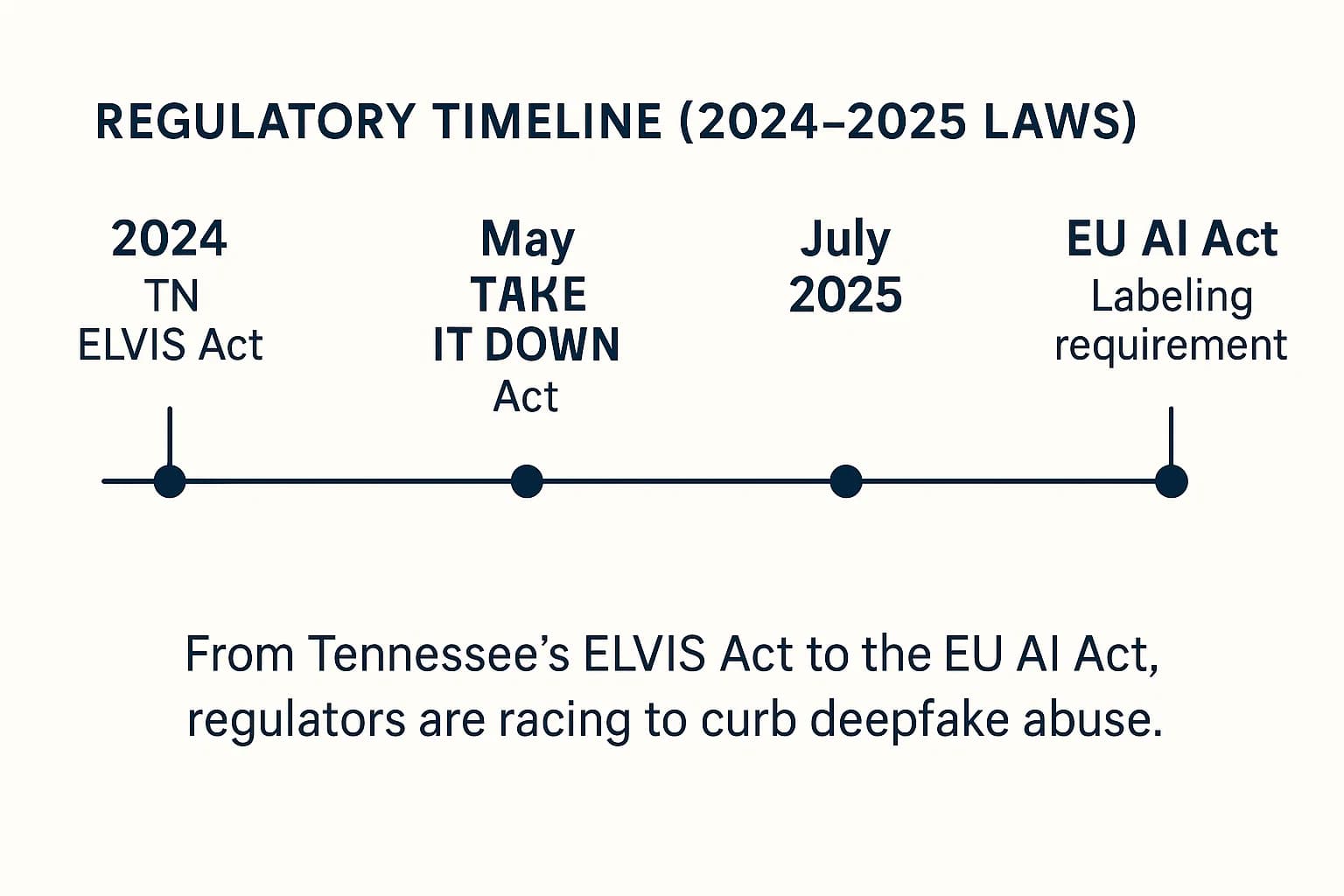

Governments around the world are scrambling to create legal frameworks to address the harms caused by deepfakes. This has resulted in a fragmented but important set of new rules.

The average cost of a deepfake attack for a business in 2024 was nearly $500,000 per incident. However, high-stakes attacks can be far more damaging, as seen in the Arup case, which resulted in a loss of $25 million from a single, sophisticated deepfake video conference.

Humans are extremely inaccurate, correctly identifying high-quality deepfake videos only about 24.5% of the time. While AI detection tools perform better in lab settings, their accuracy can drop by up to 50% when confronted with new, real-world deepfakes, according to a 2024 study. Neither approach is a foolproof solution on its own.

The cryptocurrency sector is by far the most targeted industry, accounting for 88% of all detected deepfake fraud cases in 2023. This is due to its digitally native operations, high-value and often irreversible transactions, and heavy reliance on remote identity verification processes that are vulnerable to spoofing. The financial services sector follows as the next most targeted industry.

Fraudsters bypass IDV/KYC checks by using sophisticated digital presentation attacks. They use "face swap" deepfakes or inject pre-recorded or real-time manipulated video streams via virtual cameras. These methods are designed to fool liveness detection by mimicking the subtle movements, expressions, and even skin textures that systems look for to confirm a user is physically present.

As of 2025, a new patchwork of laws applies. The EU AI Act mandates clear labeling of deepfakes, with its transparency obligations applying from August 2, 2026. The U.S. TAKE IT DOWN Act criminalizes non-consensual intimate deepfakes and requires a 48-hour takedown by platforms. The UK Online Safety Act makes platforms legally responsible for removing illegal content, including deepfake pornography. And Tennessee's ELVIS Act protects against the unauthorized commercial use of AI-cloned voices.

Before any payment change or urgent transfer request, especially one that seems unusual or high-pressure, finance teams should follow a strict verification protocol. The OWASP Guide to Preparing and Responding to Deepfake Events strongly advocates for such procedural defenses.

The data is unequivocal. Deepfakes are no longer an edge case or a future threat; they are a primary vector for large-scale financial fraud, sophisticated social engineering, and widespread social harm. The statistics for 2025 reveal a threat that is growing exponentially, a corporate world that is dangerously unprepared, and a human population that is psychologically ill-equipped to serve as a reliable line of defense.

Perhaps the greatest danger revealed by these statistics is not that we will be fooled by a single fake video or audio clip, but that our collective trust in all digital media will continue to erode. This phenomenon, known as the "liar's dividend," allows malicious actors to dismiss authentic evidence as a fabrication, undermining the very foundation of shared reality. The only sustainable path forward is a defense-in-depth strategy that combines advanced (but imperfect) technology like liveness detection with robust, non-negotiable procedural controls. The guiding principle for the era of synthetic media must be: verify, don't just trust.

The threats of 2025 demand more than just awareness; they require readiness. If you're looking to validate your security posture against sophisticated AI cybersecurity threats, identify hidden risks in your verification processes, or build a resilient defense strategy, DeepStrike is here to help. Our team of practitioners provides clear, actionable guidance to protect your business.

Explore our penetration testing services for businesses to see how we can uncover vulnerabilities before attackers do. Drop us a line; we’re always ready to dive in.

Mohammed Khalil is a Cybersecurity Architect at DeepStrike, specializing in advanced penetration testing and offensive security operations. With certifications including CISSP, OSCP, and OSWE, he has led numerous red team engagements for Fortune 500 companies, focusing on cloud security, application vulnerabilities, and adversary emulation. His work involves dissecting complex attack chains and developing resilient defense strategies for clients in the finance, healthcare, and technology sectors.
