September 4, 2025

From encryption and IAM to DLP and incident response: how to build a resilient, data-centric defense in 2025.

Mohammed Khalil

In today's digital economy, data isn't just a part of your business; it is your business. It drives innovation, shapes customer experiences, and creates competitive advantages. Protecting this asset is no longer a back-office IT task; it's a board-level strategic imperative. Data security services are the comprehensive set of technologies, processes, and policies designed to protect digital information from unauthorized access, corruption, or theft throughout its entire lifecycle. This isn't just about cybersecurity; it's about business survival.

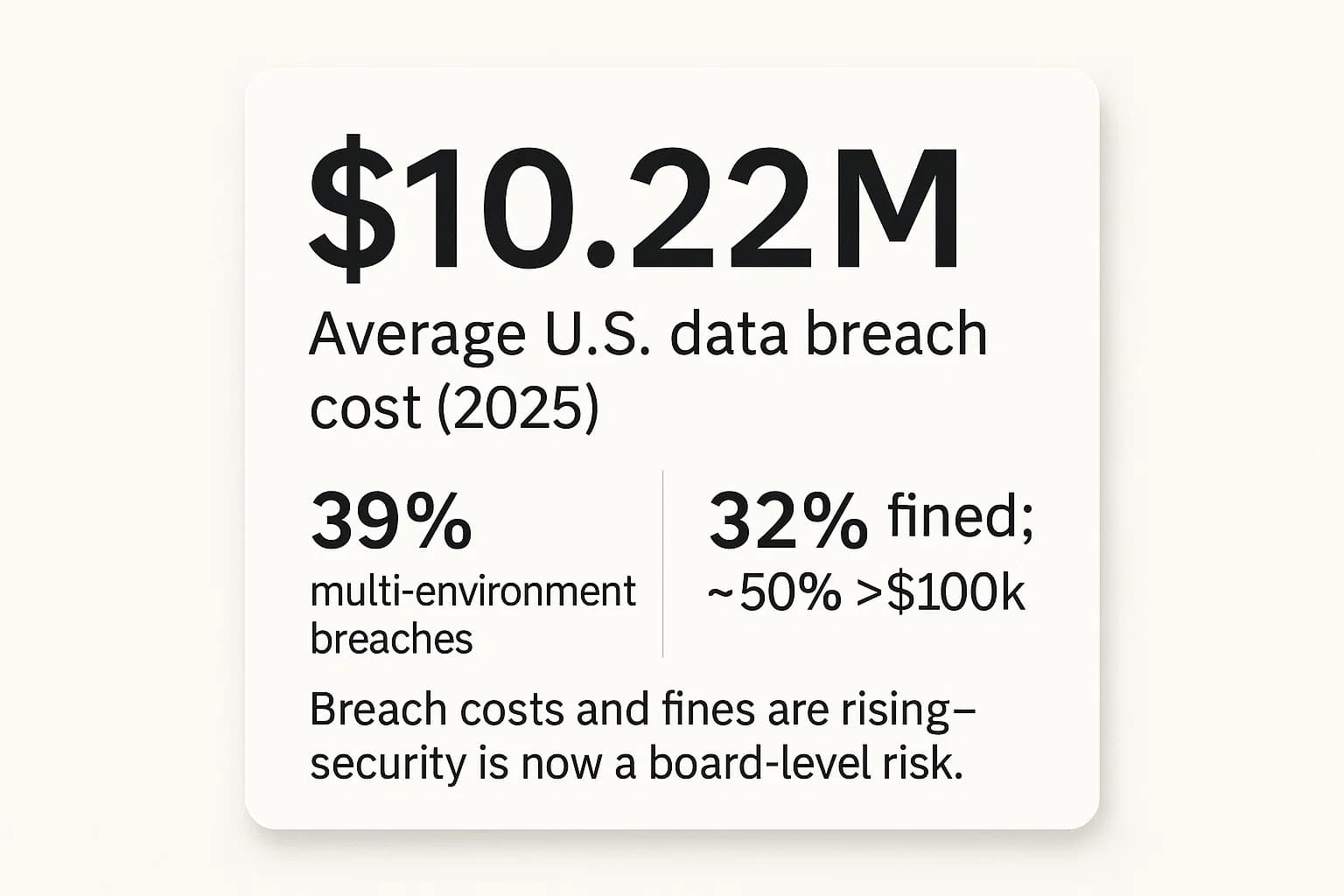

The stakes have never been higher. The IBM Cost of a Data Breach Report 2025 reveals a sobering reality: the average cost of a data breach for U.S. organizations has surged to a record $10.22 million. This number isn't an abstract statistic; it represents a potentially catastrophic event that can cripple operations, erode customer trust, and trigger steep regulatory fines.

This dramatic rise in costs reflects two powerful forces. The first is the increasing complexity of modern IT environments: 39% of breaches span multiple environments, such as public and private clouds, driving up costs. The second is the growing enforcement power of regulators. The same IBM report notes that 32% of breached organizations paid a regulatory fine, with nearly half of those fines exceeding $100,000. This signals a critical shift: the financial pain of a breach is no longer just about recovery and lost business; it is increasingly about punitive measures from authorities. Investing in data security is now as much a legal and financial risk management strategy as a technical one.

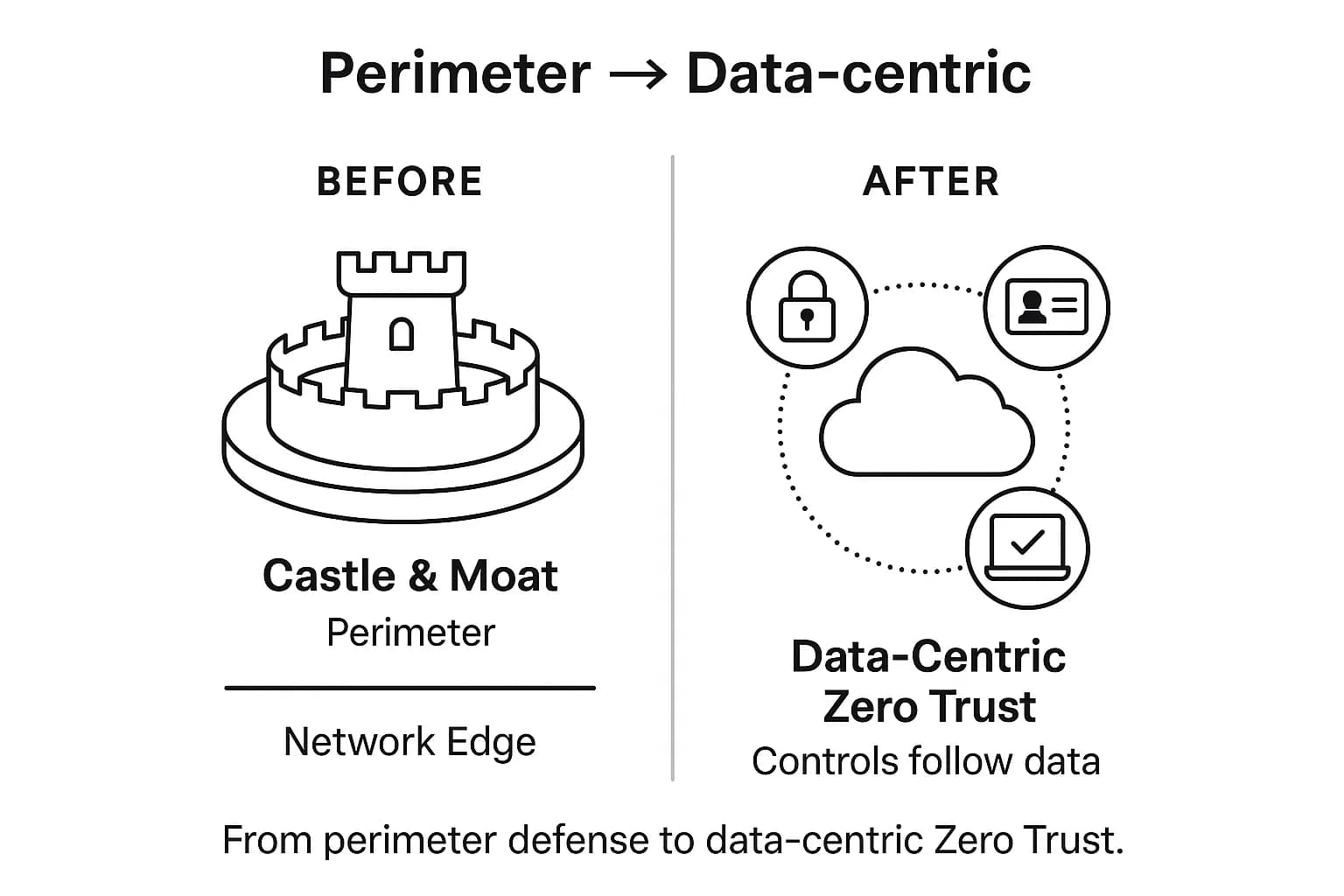

This new reality demands a radical rethinking of security. The traditional "castle and moat" model, focused on defending a network perimeter, is obsolete. With the rise of cloud services, a distributed workforce, and sprawling data landscapes, the perimeter has dissolved. The only viable strategy for 2025 and beyond is a data-centric approach: protecting the "crown jewels" (the data) directly, no matter where they are created, stored, or used. This guide provides a practitioner's roadmap to building that modern, resilient data security posture.

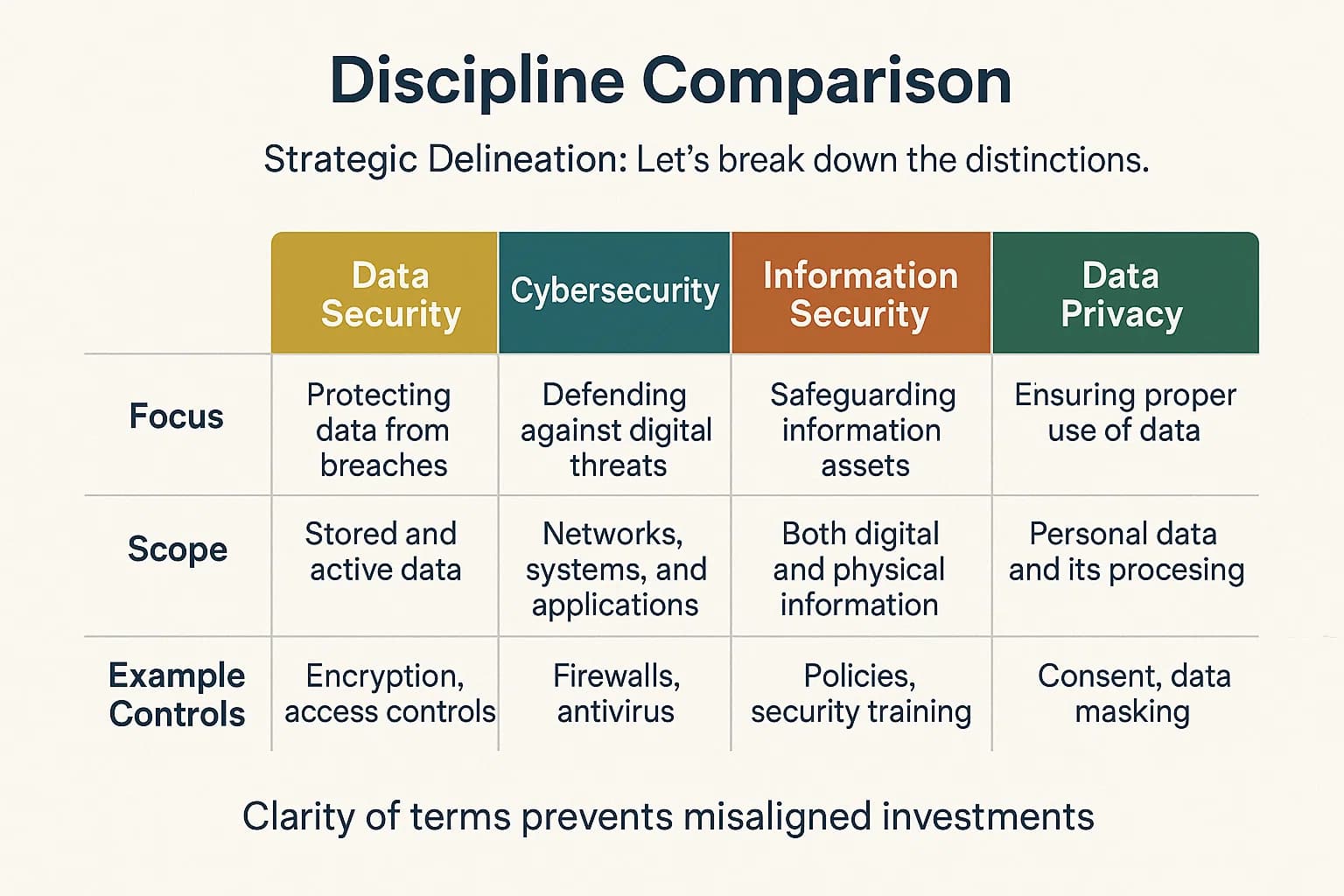

To build an effective strategy, you first need a clear, shared vocabulary. The world of information protection is filled with terms that are often used interchangeably, leading to strategic confusion and misaligned efforts. Let's establish the foundational principles and clarify the terminology.

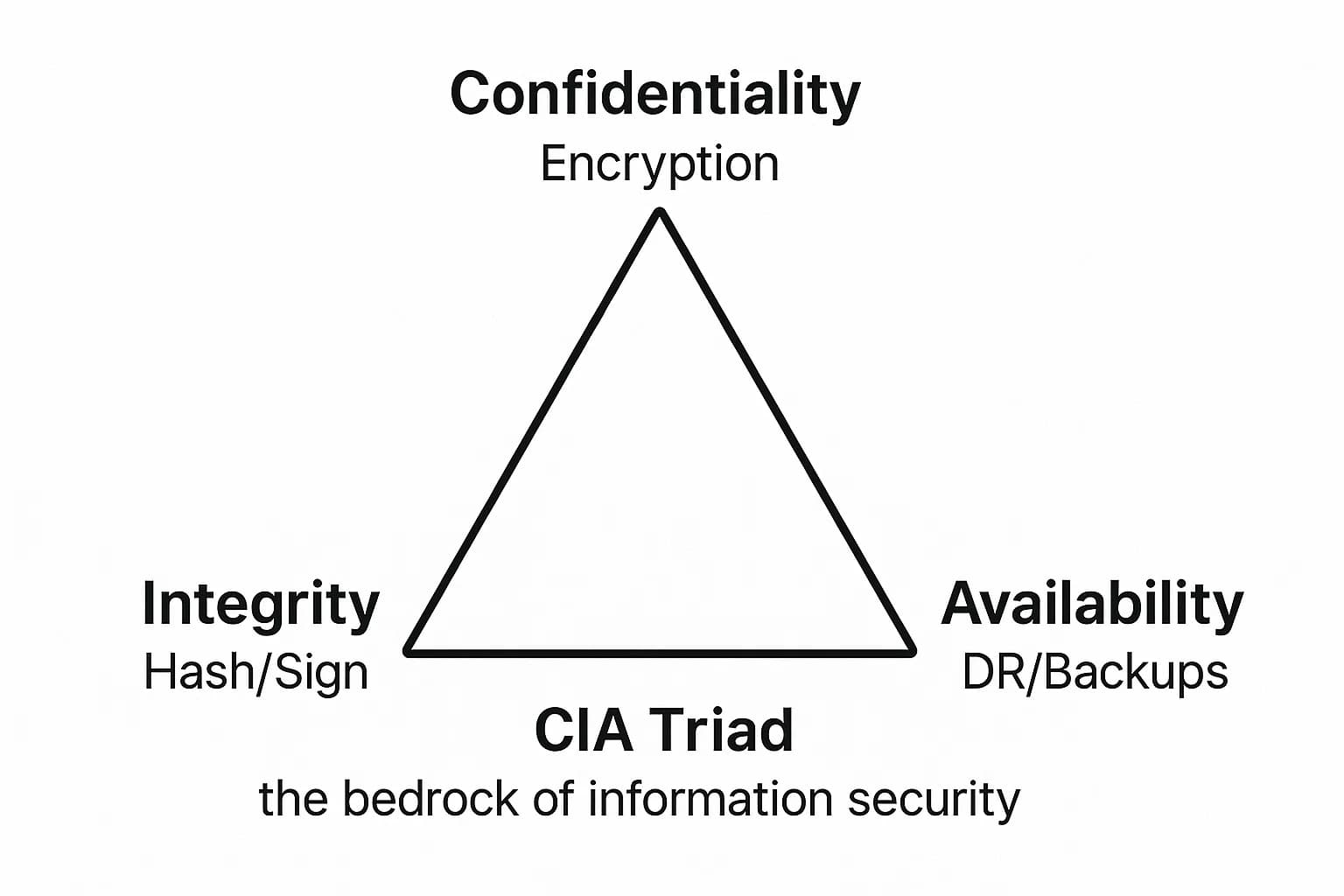

The entire discipline of information security is built on three foundational principles, collectively known as the CIA Triad: confidentiality, integrity, and availability. The National Institute of Standards and Technology (NIST) frames data security in these same terms: protecting data from unauthorized disclosure, modification, or destruction.

Understanding the precise differences between key terms is not just academic; it has profound strategic implications. The language an organization uses internally is often a powerful indicator of its security maturity and focus. An organization that defaults to "cybersecurity" likely still operates on a legacy, perimeter-focused model, thinking in terms of firewalls and network defenses. In contrast, an organization that has deliberately adopted the language of "data security" has likely made the strategic shift to a modern, data-centric, Zero Trust architecture, focusing on controls that are attached to the data itself.

This reflects a fundamental philosophical difference. The first is a "castle and moat" approach. The second is a "protect the crown jewels directly" approach, which is essential in a world where the moat (the network perimeter) has all but vanished.

Let's break down the distinctions.

The following provides a clear reference for these disciplines.

Security Discipline Comparison

A robust data security strategy is built on a foundation of core technologies designed to render data unusable to unauthorized parties, protect it as it moves, and reduce its value as a target. Each serves a distinct purpose and is applied based on the specific use case and compliance requirements.

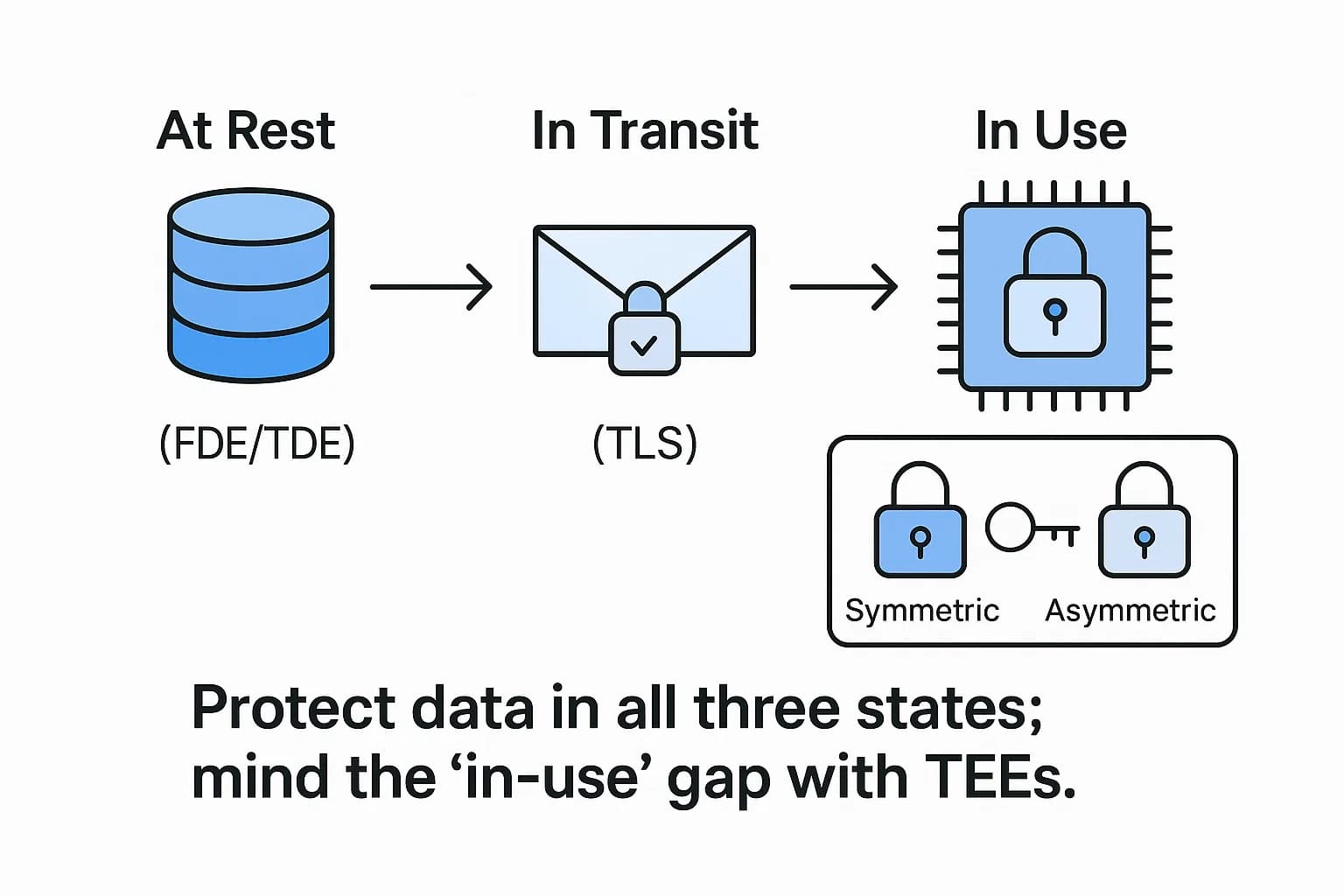

Encryption is the process of using a cryptographic algorithm to transform readable data (plaintext) into an unreadable format (ciphertext). It's the ultimate safeguard; even if data is stolen, it remains meaningless without the correct decryption key.
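To make the plaintext, ciphertext, and key relationship concrete, here is a deliberately tiny sketch using a one-time pad (XOR with a random key as long as the message), built only on Python's standard library. It is a teaching illustration under that assumption, not a recommendation: production systems should use a vetted authenticated cipher such as AES-GCM from a maintained cryptography library, with proper key management. The function names are hypothetical.

```python
import secrets

def otp_encrypt(plaintext: bytes) -> tuple[bytes, bytes]:
    """Toy one-time pad: returns (ciphertext, key). The key must never be reused."""
    key = secrets.token_bytes(len(plaintext))  # random key, same length as the message
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, key))
    return ciphertext, key

def otp_decrypt(ciphertext: bytes, key: bytes) -> bytes:
    """XOR is its own inverse, so applying the same key recovers the plaintext."""
    if len(key) != len(ciphertext):
        raise ValueError("key must be exactly as long as the ciphertext")
    return bytes(c ^ k for c, k in zip(ciphertext, key))

ct, key = otp_encrypt(b"card=4111111111111111")
# Without `key`, `ct` is indistinguishable from random bytes; with it, decryption is trivial.
assert otp_decrypt(ct, key) == b"card=4111111111111111"
```

The point carries over to real ciphers: whoever holds the key holds the data, which is why key management matters as much as the algorithm.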

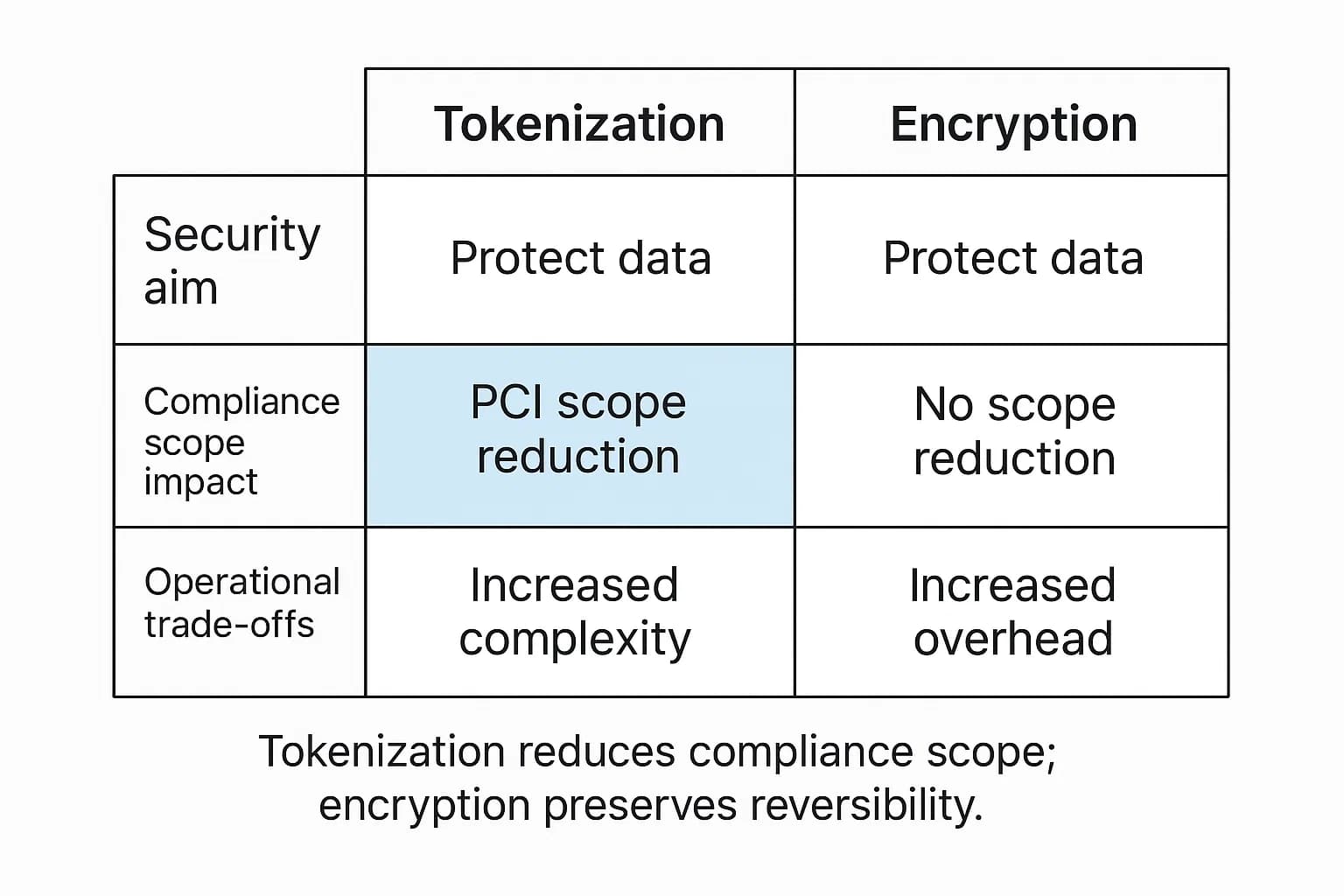

While often grouped together, tokenization and encryption serve very different strategic purposes. Tokenization is a non-mathematical process that replaces sensitive data (like a credit card number) with a non-sensitive, randomly generated substitute called a "token." The original data is stored securely in a centralized "token vault."

The crucial difference is that there is no mathematical key to reverse the token. The only way to retrieve the original data is to present the token to the system, which looks it up in the vault. This has profound business implications, especially for compliance. Bodies like the PCI Security Standards Council still consider encrypted cardholder data sensitive, whereas properly implemented tokens generally are not.

This means tokenization's primary business driver is compliance scope reduction. By tokenizing credit card numbers at the point of sale, a retailer can remove the actual sensitive data from the vast majority of its network and applications. This can shrink the number of systems subject to stringent PCI DSS requirements, including the penetration testing described in Requirement 11.3, from hundreds to just a handful, dramatically lowering audit costs and administrative overhead.
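The vault lookup described above can be sketched in a few lines. This is a minimal, hypothetical in-memory `TokenVault`; a real vault is a hardened, access-controlled, audited service, and the `tok_` prefix and deterministic reuse of tokens are illustrative design choices, not a standard.

```python
import secrets

class TokenVault:
    """Hypothetical in-memory token vault mapping random tokens to original values."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so the same card always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)  # random: no key exists that could reverse it
        while token in self._token_to_value:   # guard against an (unlikely) collision
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # The only way back to the original value is a lookup inside the vault.
        return self._token_to_value[token]

vault = TokenVault()
token = vault.tokenize("4111111111111111")
print(token)                    # random each run; reveals nothing about the card
print(vault.detokenize(token))  # 4111111111111111
```

Downstream systems store and pass around only `token`; only the few systems allowed to call `detokenize` ever see the real number, which is what keeps the rest of the environment out of scope.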

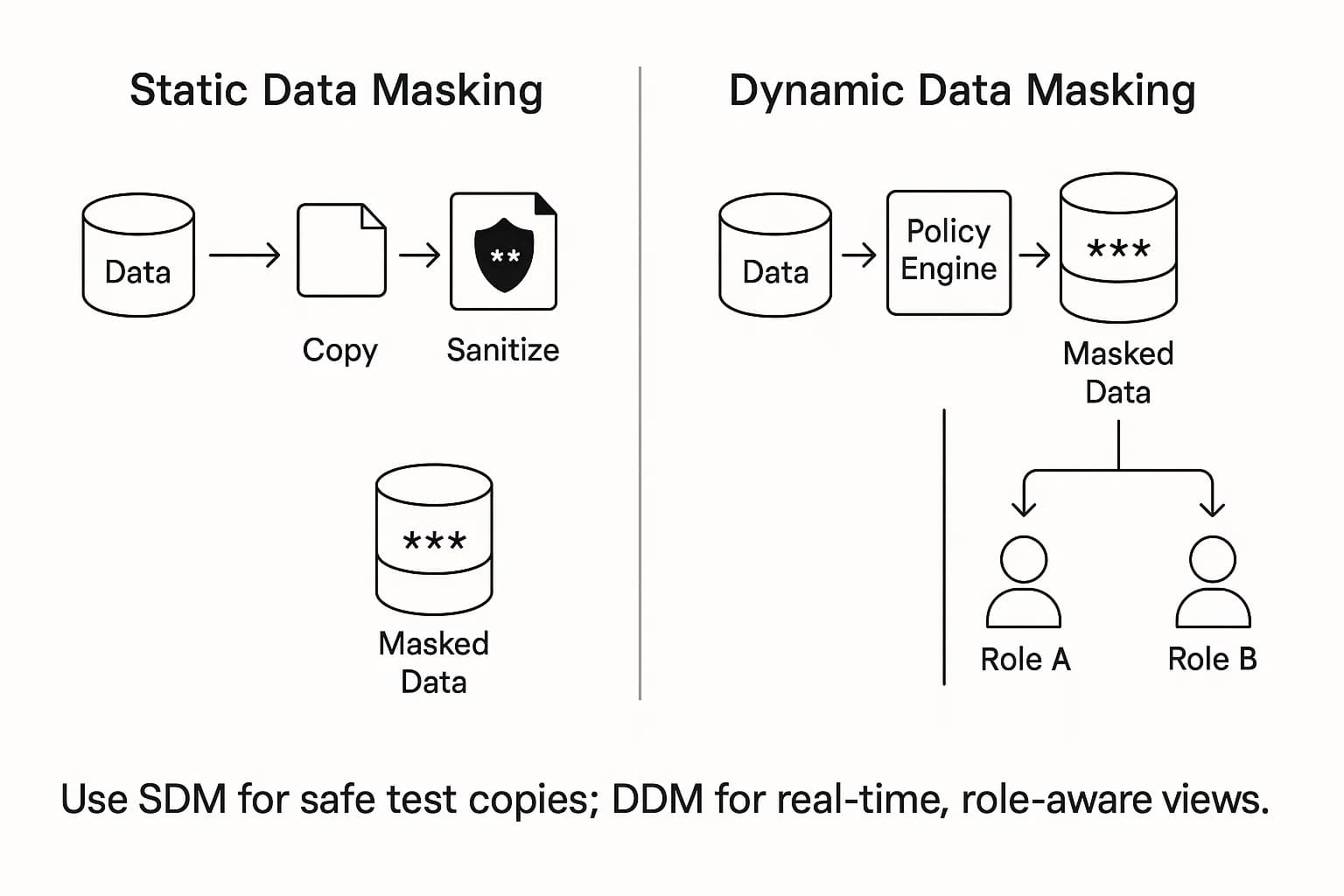

Data masking (or data obfuscation) is the process of creating a structurally similar but inauthentic version of your data. It replaces sensitive information with realistic but fake data, preserving the original format. This allows development, testing, and training teams to work with high-fidelity datasets without exposing actual sensitive information, so development can move quickly without putting real data at risk.
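A minimal sketch of format-preserving masking, assuming a hypothetical `mask_digits` helper: every digit is replaced with a random one while separators stay in place, and card numbers optionally keep their last four digits. The seeded `random.Random` is a choice for reproducible test fixtures; the fake names are likewise hypothetical.

```python
import random

def mask_digits(value: str, rng: random.Random, keep_last: int = 0) -> str:
    """Replace each digit with a random digit, keeping separators so the format survives."""
    total_digits = sum(ch.isdigit() for ch in value)
    out = []
    seen = 0
    for ch in value:
        if ch.isdigit():
            seen += 1
            # Keep the trailing `keep_last` digits; substitute a fake digit everywhere else.
            out.append(ch if seen > total_digits - keep_last else str(rng.randrange(10)))
        else:
            out.append(ch)  # dashes, spaces, etc. are copied unchanged
    return "".join(out)

rng = random.Random(42)  # seeded so masked test data is reproducible between runs
record = {"name": "Jane Doe", "ssn": "123-45-6789", "card": "4111 1111 1111 1234"}
masked = {
    "name": rng.choice(["Alex Smith", "Sam Lee", "Chris Park"]),  # hypothetical fake names
    "ssn": mask_digits(record["ssn"], rng),
    "card": mask_digits(record["card"], rng, keep_last=4),
}
print(masked)  # same layout as the original; the card keeps only its last four digits
```

Because the shapes are preserved, validation rules, database schemas, and UI layouts keep working against the masked copy.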

In the modern enterprise, the traditional network perimeter has dissolved. With data and users distributed globally across cloud services and remote locations, a user's digital identity has become the new security perimeter. As a result, Identity and Access Management (IAM) has evolved from an administrative function into the central control plane for data security.

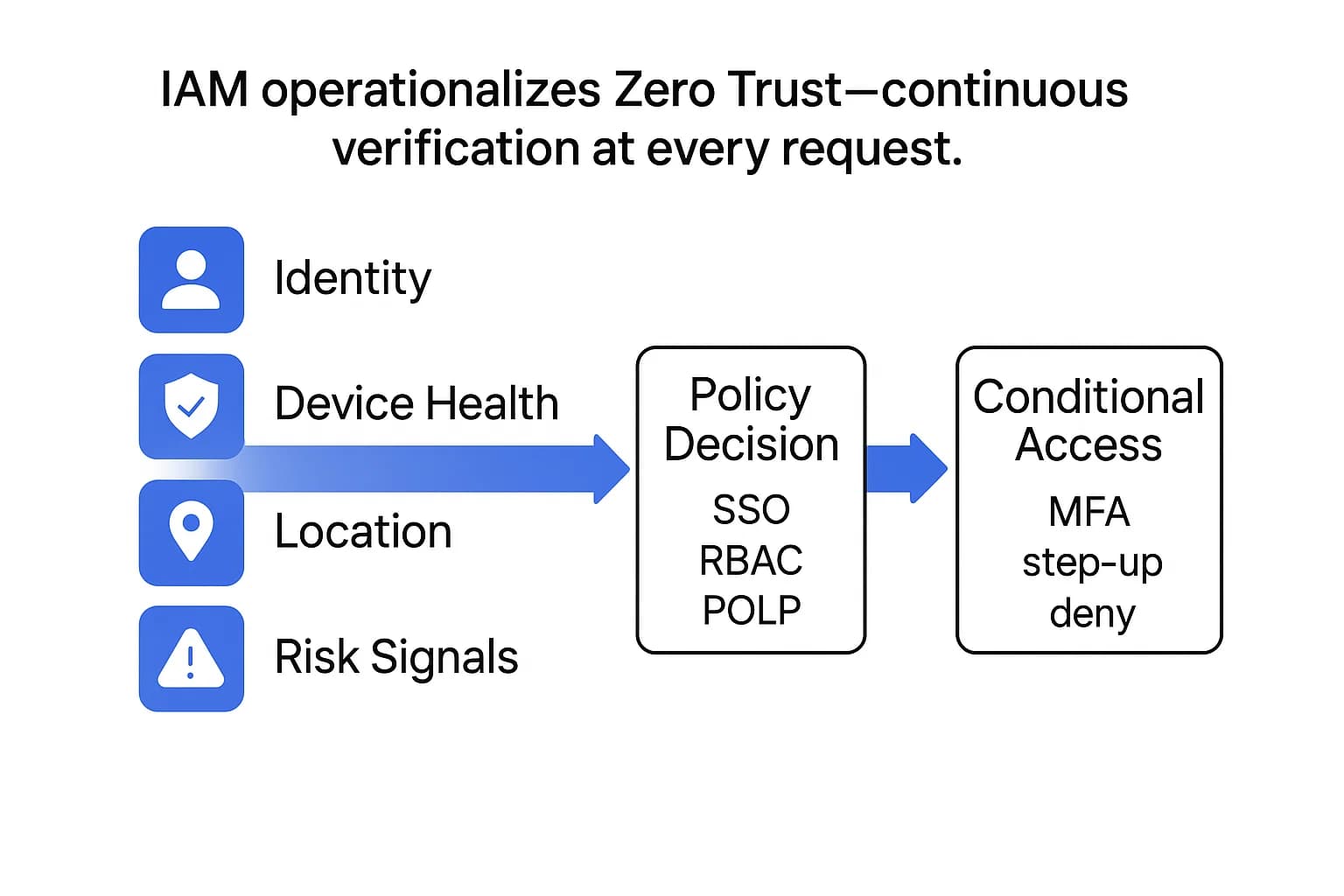

The failure of the "castle and moat" model has given rise to the Zero Trust security model. Its philosophy is simple but powerful: assume no implicit trust, even for users already inside the network. Every single request to access a resource must be authenticated and authorized as if it came from an untrusted network.

Zero Trust is a strategic philosophy, but IAM is the set of tools and processes that makes it operational. Without a mature IAM program, Zero Trust is just a concept on a whiteboard. IAM is what translates the "never trust, always verify" principle into real-time, context-aware security decisions. It moves security from a single, one-time check at the network edge to continuous verification of every interaction, asking not just "Are you on our network?" but "Are you a verified user, on a healthy device, from an expected location, and are you authorized to access this specific data right now?" This elevates IAM from a simple login utility to the primary enforcer of data protection policies.
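The per-request question in the paragraph above can be sketched as a policy decision function. The `AccessRequest` fields, the policy tables, and the resource naming are hypothetical; real deployments pull these signals from an identity provider, device management, and entitlement systems.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AccessRequest:
    user_id: str
    mfa_verified: bool
    device_compliant: bool  # e.g., patched, disk-encrypted, security agent running
    country: str
    resource: str

# Hypothetical policy data for illustration only.
EXPECTED_COUNTRIES = {"alice": {"US", "CA"}}
ENTITLEMENTS = {"alice": {"crm:customers:read"}}

def evaluate(req: AccessRequest) -> tuple[bool, str]:
    """Judge every request on its own signals; being 'inside the network' grants nothing."""
    if not req.mfa_verified:
        return False, "user identity not strongly verified"
    if not req.device_compliant:
        return False, "device fails health check"
    if req.country not in EXPECTED_COUNTRIES.get(req.user_id, set()):
        return False, "unexpected location"
    if req.resource not in ENTITLEMENTS.get(req.user_id, set()):
        return False, "not authorized for this resource"
    return True, "allow"

print(evaluate(AccessRequest("alice", True, True, "US", "crm:customers:read")))
# (True, 'allow')
print(evaluate(AccessRequest("alice", True, False, "US", "crm:customers:read")))
# (False, 'device fails health check')
```

Note that there is no "trusted subnet" branch at all: the decision depends only on identity, device, context, and entitlement.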

A mature IAM program manages the entire lifecycle of digital identities and their access rights. Its core functions include:

A critical distinction must be made between authentication and authorization. Think of it this way: Authentication is showing your ID to the bouncer to get into the club. Authorization is the VIP wristband that dictates which specific rooms you're allowed to enter once you're inside. Both are essential for effective access control.
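The bouncer-and-wristband split maps directly onto two separate checks. This sketch uses the standard library's `hashlib.pbkdf2_hmac` for password verification and a simple role-to-permission table for authorization; the user store, role names, permissions, and iteration count are hypothetical choices for illustration.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 600_000  # PBKDF2 work factor; tune to your hardware and policy

def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)

# Hypothetical user store and role model.
_salt = secrets.token_bytes(16)
USERS = {"alice": {"salt": _salt, "hash": hash_password("correct horse", _salt), "role": "analyst"}}
ROLE_PERMISSIONS = {"analyst": {"reports:read"}, "admin": {"reports:read", "reports:delete"}}

def authenticate(username: str, password: str) -> bool:
    """Authentication: is this really who they claim to be? (the ID at the door)"""
    user = USERS.get(username)
    if user is None:
        return False
    candidate = hash_password(password, user["salt"])
    return hmac.compare_digest(candidate, user["hash"])  # constant-time comparison

def authorize(username: str, permission: str) -> bool:
    """Authorization: what is this already-verified user allowed to do? (the wristband)"""
    role = USERS[username]["role"]
    return permission in ROLE_PERMISSIONS.get(role, set())

if authenticate("alice", "correct horse"):
    print(authorize("alice", "reports:read"))    # True
    print(authorize("alice", "reports:delete"))  # False
```

Keeping the two functions separate mirrors the distinction in the text: passing `authenticate` says nothing about which resources `authorize` will allow.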

While IAM controls who can access data, Data Loss Prevention (DLP) services focus on what users can do with that data once they have access. DLP's mission is to prevent the unauthorized leakage or exfiltration of sensitive information from an organization's control.

DLP solutions work by using deep content analysis (e.g., using regular expressions to find patterns like credit card or Social Security numbers) and contextual analysis (e.g., monitoring user behavior for anomalies) to identify sensitive data and enforce policies to stop it from being shared or transferred in unsafe ways.
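Content analysis of this kind usually pairs a pattern with a validity check to cut false positives. Here is a minimal sketch, assuming simplified regular expressions for U.S. Social Security numbers and 13 to 16 digit card numbers, with the Luhn checksum used to discard digit runs that cannot be real cards. The detector names are hypothetical, and commercial DLP engines use far more detectors plus contextual rules.

```python
import re

# Simplified detectors for illustration.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,16}\b")  # 13-16 digits, optional separators
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

def luhn_valid(candidate: str) -> bool:
    """Luhn checksum: rejects digit runs that cannot be real card numbers."""
    digits = [int(ch) for ch in candidate if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def scan(text: str) -> list[str]:
    """Return the sensitive data types found; a policy engine would then block or alert."""
    findings = [
        "credit_card" for m in CARD_CANDIDATE.finditer(text) if luhn_valid(m.group())
    ]
    findings += ["us_ssn"] * len(US_SSN.findall(text))
    return findings

print(scan("Card 4111 1111 1111 1111, SSN 123-45-6789"))  # ['credit_card', 'us_ssn']
print(scan("Order ref 4111 1111 1111 1112"))              # [] (fails the Luhn check)
```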

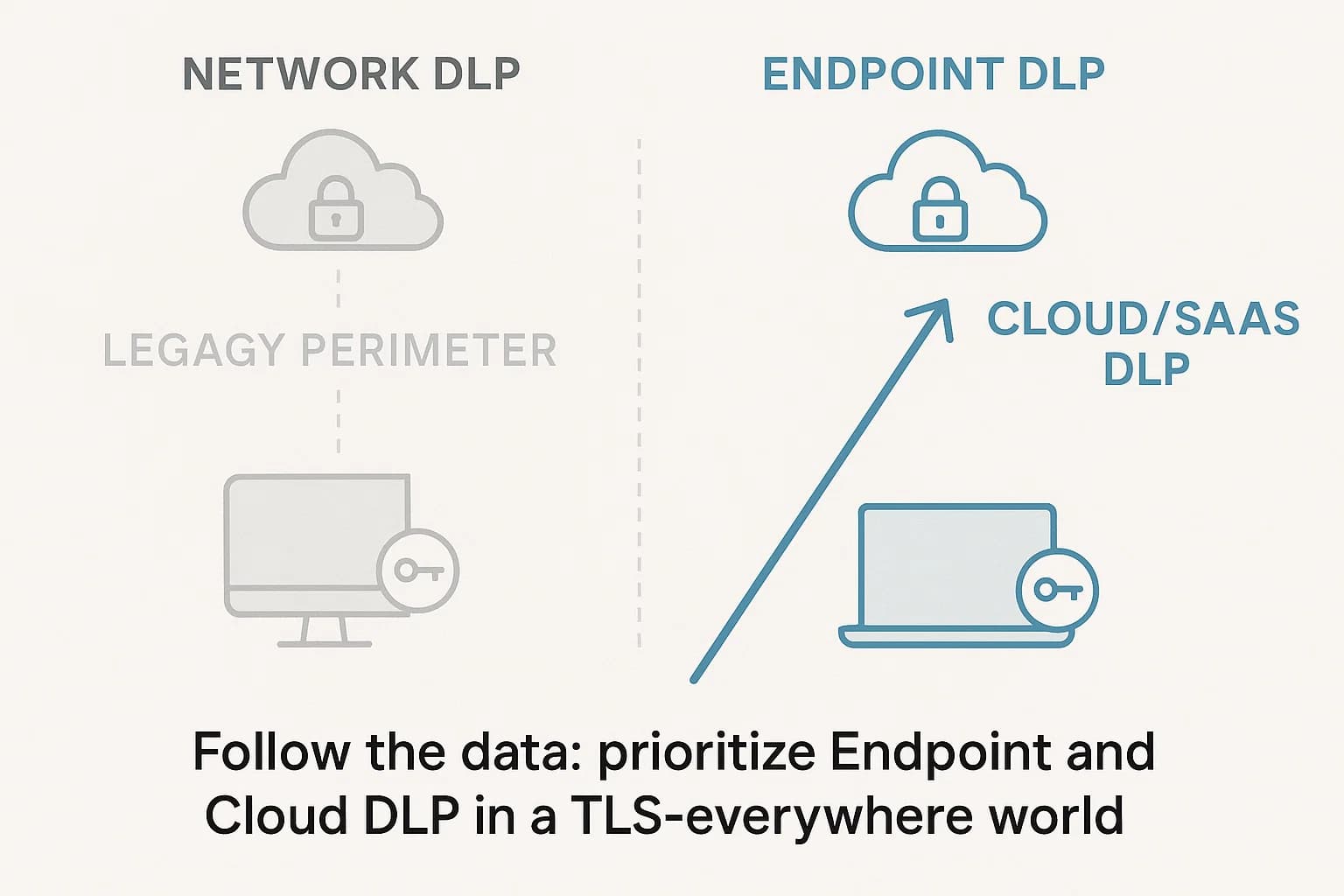

DLP solutions are typically deployed in three models: Network DLP, Endpoint DLP, and Cloud DLP.

For years, Network DLP was considered the primary control. However, this model is now obsolete as a primary control. The mass migration to cloud applications, the universal adoption of end-to-end TLS encryption for web traffic, and the shift to a distributed, remote workforce have created massive blind spots for traditional Network DLP. Most corporate data traffic no longer flows through the central corporate network where Network DLP sits; it travels directly from a remote employee's laptop over their home internet to a cloud application.

As a result, a strategic inversion has occurred: Endpoint DLP and Cloud DLP are now the essential, primary forms of data loss prevention. They provide visibility and control where the data now lives and moves: on the user's device and within the cloud applications they use.

This shift is not just a technical update; it demands a fundamental re-architecture of a company's data protection strategy and a corresponding reallocation of the security budget. CISOs who continue to invest heavily in legacy network DLP appliances are funding a tool with diminishing returns while leaving the two most common egress points in the modern enterprise (the remote endpoint and the cloud) dangerously exposed. The budget and architectural focus must shift to solutions that address this modern reality.

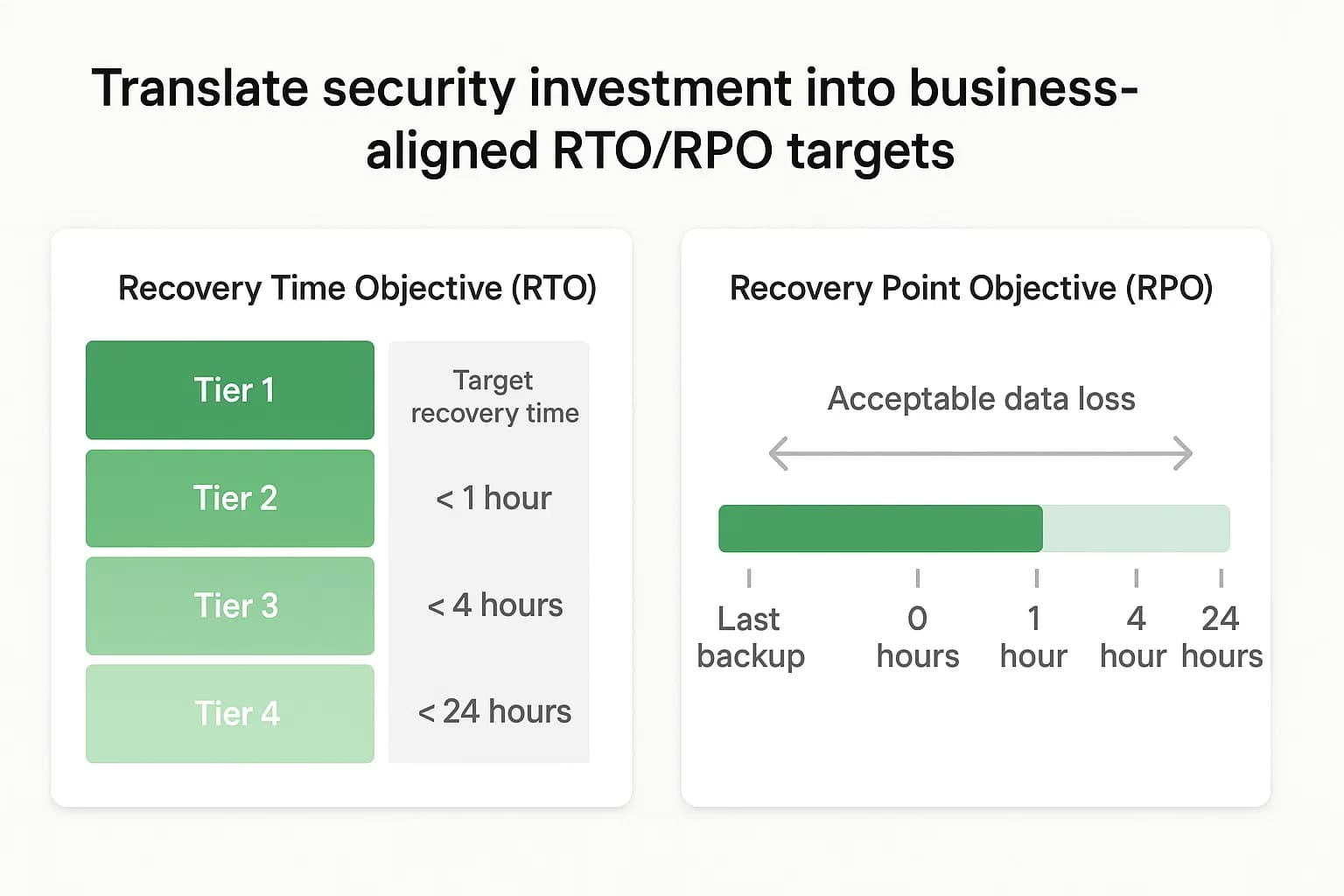

No defense is infallible. A mature security strategy operates under the assumption that a security incident will eventually occur. Therefore, the ability to withstand and recover from a disruptive event is just as critical as the ability to prevent one. This capacity for resilience is the ultimate measure of a security program's effectiveness, as it directly supports the organization's primary goal: business continuity.

By shifting the conversation with executive leadership from abstract threat prevention to tangible business resilience, the CISO can align security investments directly with the preservation of business operations. This is achieved by framing needs in business terms, using metrics like Recovery Time Objectives (RTO, the maximum tolerable downtime) and Recovery Point Objectives (RPO, the maximum tolerable data loss, measured in time) for critical revenue streams. Instead of asking for a new firewall to stop hackers, a CISO can justify investing in a data resiliency solution that meets a one-hour RTO for an e-commerce platform generating millions of dollars per day. This reframes the security function from a technical cost center into a strategic business partner vital to the organization's survival.
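The business-terms argument above is simple arithmetic. This sketch uses hypothetical figures (a platform earning $2.4 million per day, sales spread evenly across the day, an eight-hour recovery measured in a DR test) and counts only lost revenue, ignoring fines, churn, and recovery labor.

```python
# Hypothetical figures for an e-commerce platform; substitute your own.
daily_revenue = 2_400_000               # USD per day
revenue_per_hour = daily_revenue / 24   # assumes sales are spread evenly over the day

def downtime_cost(outage_hours: float) -> float:
    """Revenue lost while the platform is down (excludes fines, churn, recovery labor)."""
    return outage_hours * revenue_per_hour

def meets_rpo(backup_interval_minutes: int, rpo_minutes: int) -> bool:
    # Worst case, everything written since the last good backup is lost.
    return backup_interval_minutes <= rpo_minutes

current_recovery_hours = 8  # e.g., measured in the last DR exercise
target_rto_hours = 1        # the business-agreed RTO

print(downtime_cost(current_recovery_hours))  # 800000.0
print(downtime_cost(target_rto_hours))        # 100000.0
print(downtime_cost(current_recovery_hours) - downtime_cost(target_rto_hours))  # 700000.0
print(meets_rpo(240, 15))  # False: 4-hourly backups cannot meet a 15-minute RPO
```

In this made-up scenario, every incident recovered within the RTO protects roughly $700,000 of revenue, a figure an executive team can weigh directly against the cost of the resilience investment.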

Data backup and disaster recovery (DR) are the core components of a resilience strategy.
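A backup is only useful if it is intact when you need it. Here is a minimal sketch, assuming a single file and a hypothetical `backup_and_verify` helper, that copies data and then proves the copy matches the source by comparing SHA-256 digests. In practice the destination would be separate, ideally offline or immutable storage, and restores would be rehearsed rather than just checksummed.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256_of(path: Path) -> str:
    """Stream the file in chunks so large backups do not need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()

def backup_and_verify(source: Path, backup_dir: Path) -> Path:
    """Copy `source` into `backup_dir`, then verify the copy by comparing hashes."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    dest = backup_dir / source.name
    shutil.copy2(source, dest)  # copies file contents and metadata
    if sha256_of(source) != sha256_of(dest):
        raise RuntimeError(f"backup of {source} failed integrity check")
    return dest

# Demo in a throwaway directory; a real backup target would live on separate storage.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "orders.db"
    src.write_bytes(b"order 1001: paid\n")
    copy = backup_and_verify(src, Path(tmp) / "backups")
    print(copy.read_bytes() == src.read_bytes())  # True
```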

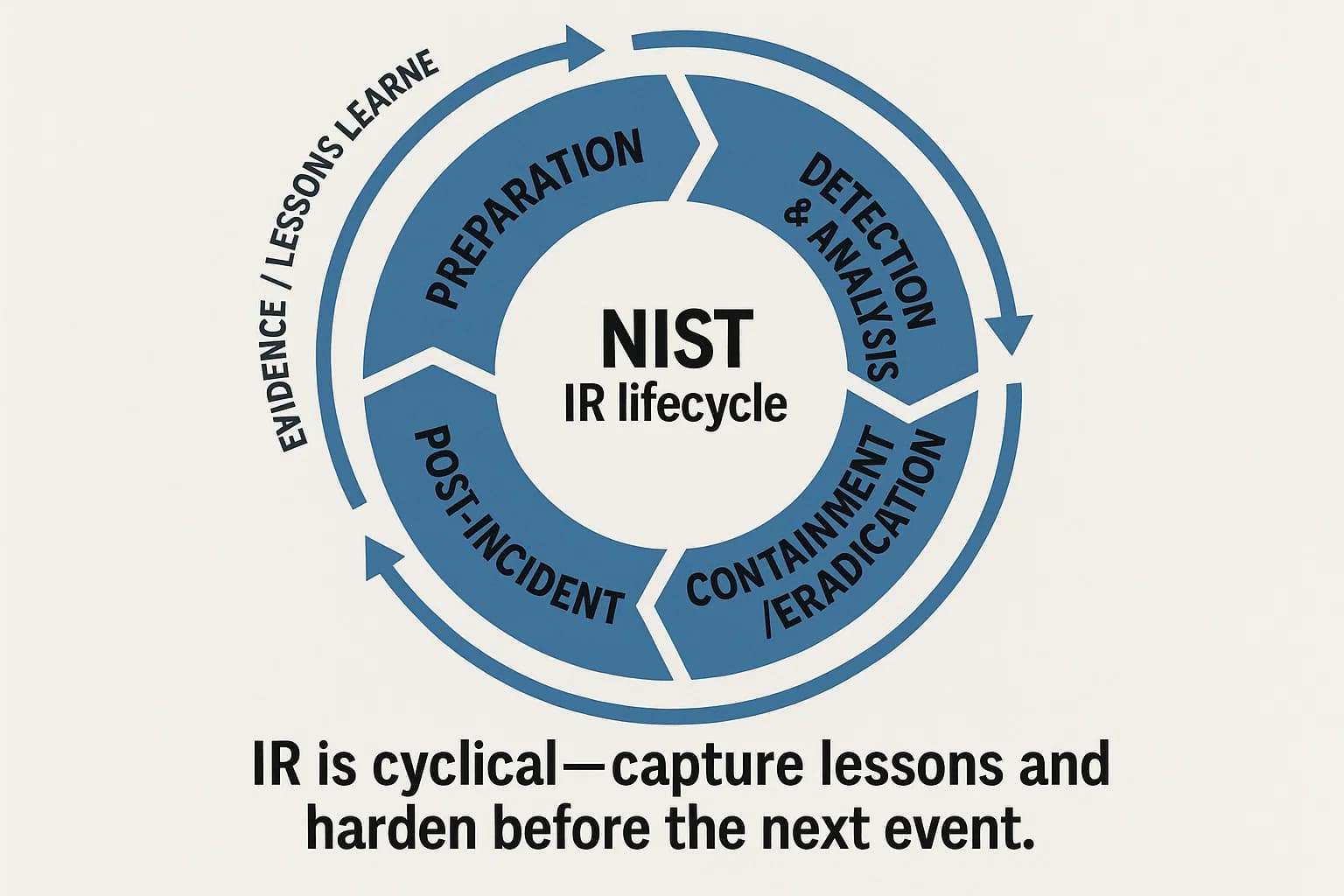

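When an incident occurs, a well-defined and rehearsed Incident Response (IR) plan is critical to minimizing damage. NIST Special Publication 800-61 (Rev. 2) provides a clear, four-phase lifecycle for managing an incident: Preparation; Detection and Analysis; Containment, Eradication, and Recovery; and Post-Incident Activity.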
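The lifecycle is a cycle, not a straight line, and that shape can be encoded so tooling refuses to skip steps. This is a minimal sketch of the NIST SP 800-61 Rev. 2 phases as a state machine; the `IRTracker` class and the incident ID are hypothetical, and real IR platforms track far more (evidence, owners, timelines).

```python
from enum import Enum

class Phase(Enum):
    PREPARATION = "Preparation"
    DETECTION_ANALYSIS = "Detection and Analysis"
    CONTAINMENT_ERADICATION_RECOVERY = "Containment, Eradication, and Recovery"
    POST_INCIDENT = "Post-Incident Activity"

# Allowed moves: containment can send responders back to analysis when new evidence
# appears, and lessons learned feed back into preparation.
ALLOWED = {
    Phase.PREPARATION: {Phase.DETECTION_ANALYSIS},
    Phase.DETECTION_ANALYSIS: {Phase.CONTAINMENT_ERADICATION_RECOVERY},
    Phase.CONTAINMENT_ERADICATION_RECOVERY: {Phase.DETECTION_ANALYSIS, Phase.POST_INCIDENT},
    Phase.POST_INCIDENT: {Phase.PREPARATION},
}

class IRTracker:
    """Hypothetical tracker that rejects transitions the lifecycle does not allow."""

    def __init__(self, incident_id: str) -> None:
        self.incident_id = incident_id
        self.phase = Phase.PREPARATION
        self.history = [self.phase]

    def advance(self, target: Phase) -> None:
        if target not in ALLOWED[self.phase]:
            raise ValueError(f"{self.incident_id}: cannot go from {self.phase.value} to {target.value}")
        self.phase = target
        self.history.append(target)

ir = IRTracker("INC-0042")  # hypothetical incident ID
for step in (Phase.DETECTION_ANALYSIS, Phase.CONTAINMENT_ERADICATION_RECOVERY,
             Phase.POST_INCIDENT, Phase.PREPARATION):
    ir.advance(step)
print(" -> ".join(p.value for p in ir.history))
```

Skipping straight from detection to "lessons learned" raises an error, which is exactly the discipline a rehearsed plan is meant to enforce.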

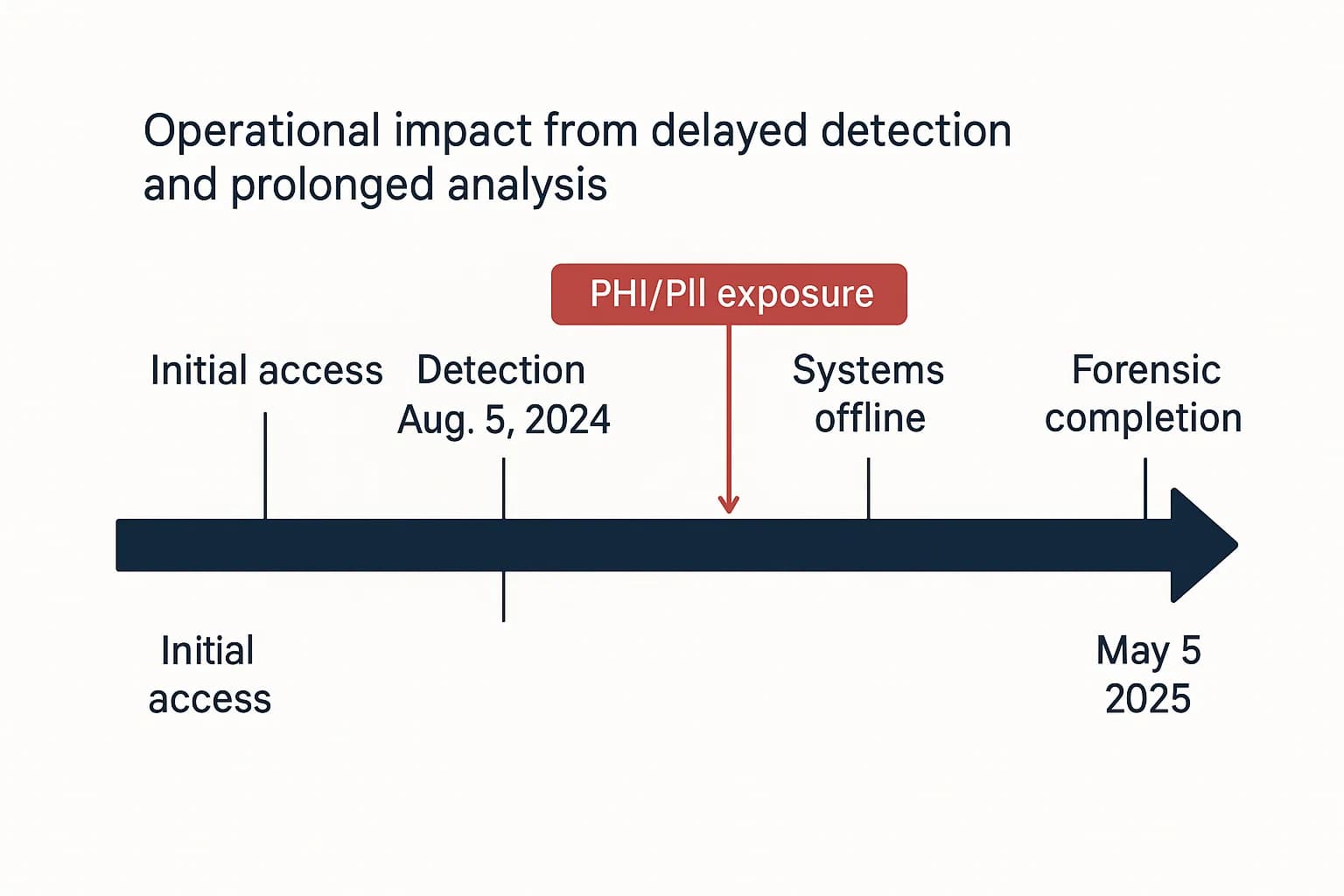

The devastating impact of a resilience failure is powerfully illustrated by the 2024 ransomware attack on McLaren Health Care.

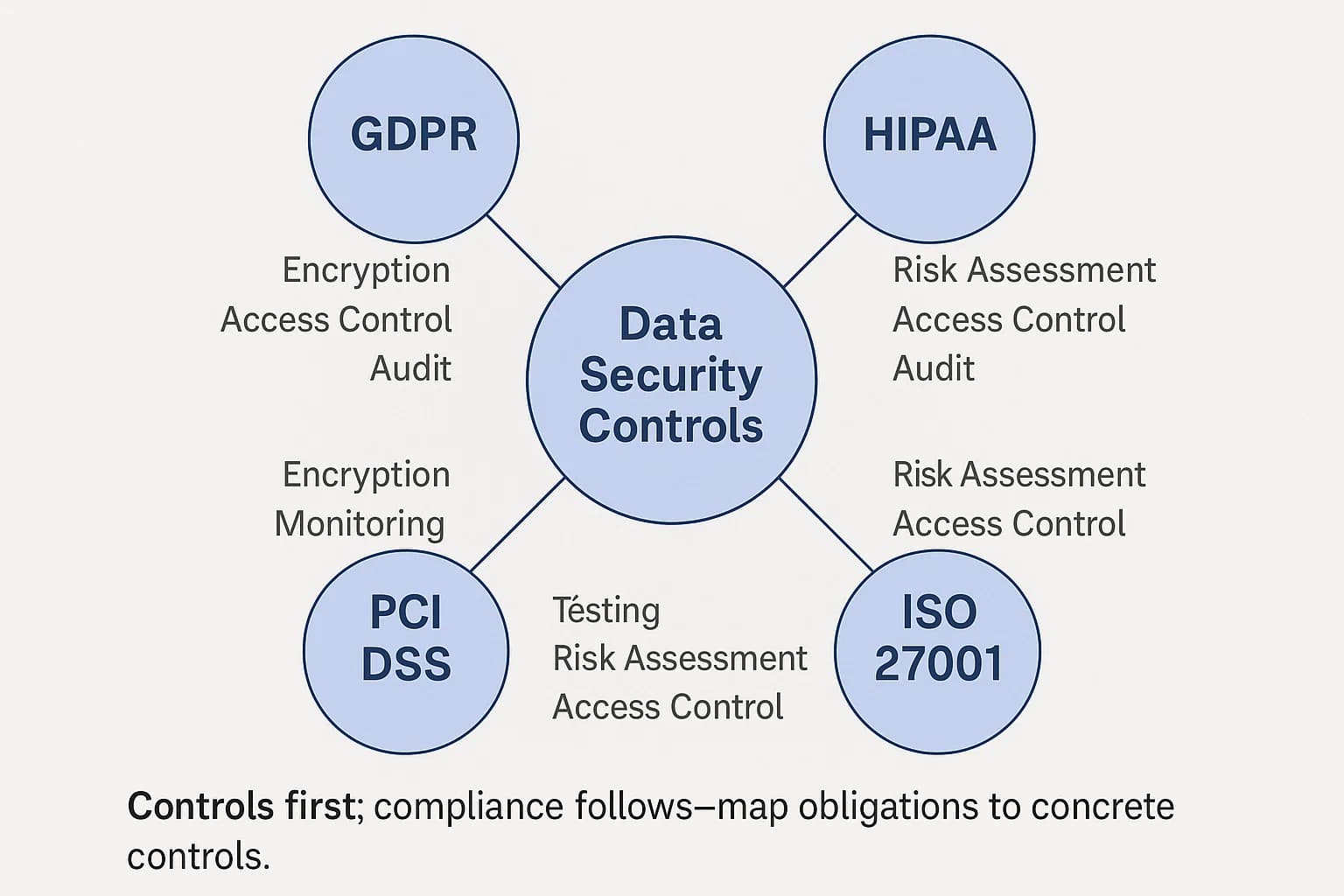

Data security services do not operate in a vacuum. They are governed by a complex web of legal regulations, industry standards, and best practice frameworks. A mature data security program is one that not only implements effective technical controls but also demonstrates auditable compliance with these mandates, treating them not as a burden but as a driver for security maturity.

Beyond specific regulations, many organizations adopt the NIST Cybersecurity Framework (CSF) to guide their security programs. The CSF is not a rigid standard but a flexible, voluntary framework of best practices designed to help organizations manage and reduce cybersecurity risk.

Its true strategic value lies in its ability to serve as a communication tool that bridges the gap between technical practitioners and business leaders. Its structure (as of CSF 2.0) is organized around six simple, intuitive core functions: Govern, Identify, Protect, Detect, Respond, and Recover.

By aligning the security program with these functions, a CISO can move the conversation with leadership away from a technical checklist and toward a strategic discussion about managing business risk. This approach positions compliance as a natural outcome of a robust, risk-based security posture, rather than making it the sole objective.
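One practical way to start that conversation is a simple coverage map: tag each existing control with the CSF function it mainly supports and look for empty columns. The control list and tags below are hypothetical, and real controls often support several functions; a single tag just keeps the sketch readable.

```python
# NIST CSF 2.0 core functions.
CSF_FUNCTIONS = ["Govern", "Identify", "Protect", "Detect", "Respond", "Recover"]

# Hypothetical control inventory, each tagged with the function it mainly supports.
CONTROLS = {
    "Data discovery and classification": "Identify",
    "Encryption at rest and in transit": "Protect",
    "Identity and access management": "Protect",
    "Endpoint and cloud DLP": "Protect",
    "Incident response plan": "Respond",
    "Backup and disaster recovery": "Recover",
}

def coverage(controls: dict[str, str]) -> dict[str, list[str]]:
    """Group controls by CSF function; functions with no controls show up empty."""
    report: dict[str, list[str]] = {fn: [] for fn in CSF_FUNCTIONS}
    for control, fn in controls.items():
        report[fn].append(control)
    return report

for fn, mapped in coverage(CONTROLS).items():
    print(f"{fn:<9} {', '.join(mapped) if mapped else 'GAP: nothing mapped'}")
# In this hypothetical inventory, Govern and Detect are reported as gaps.
```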

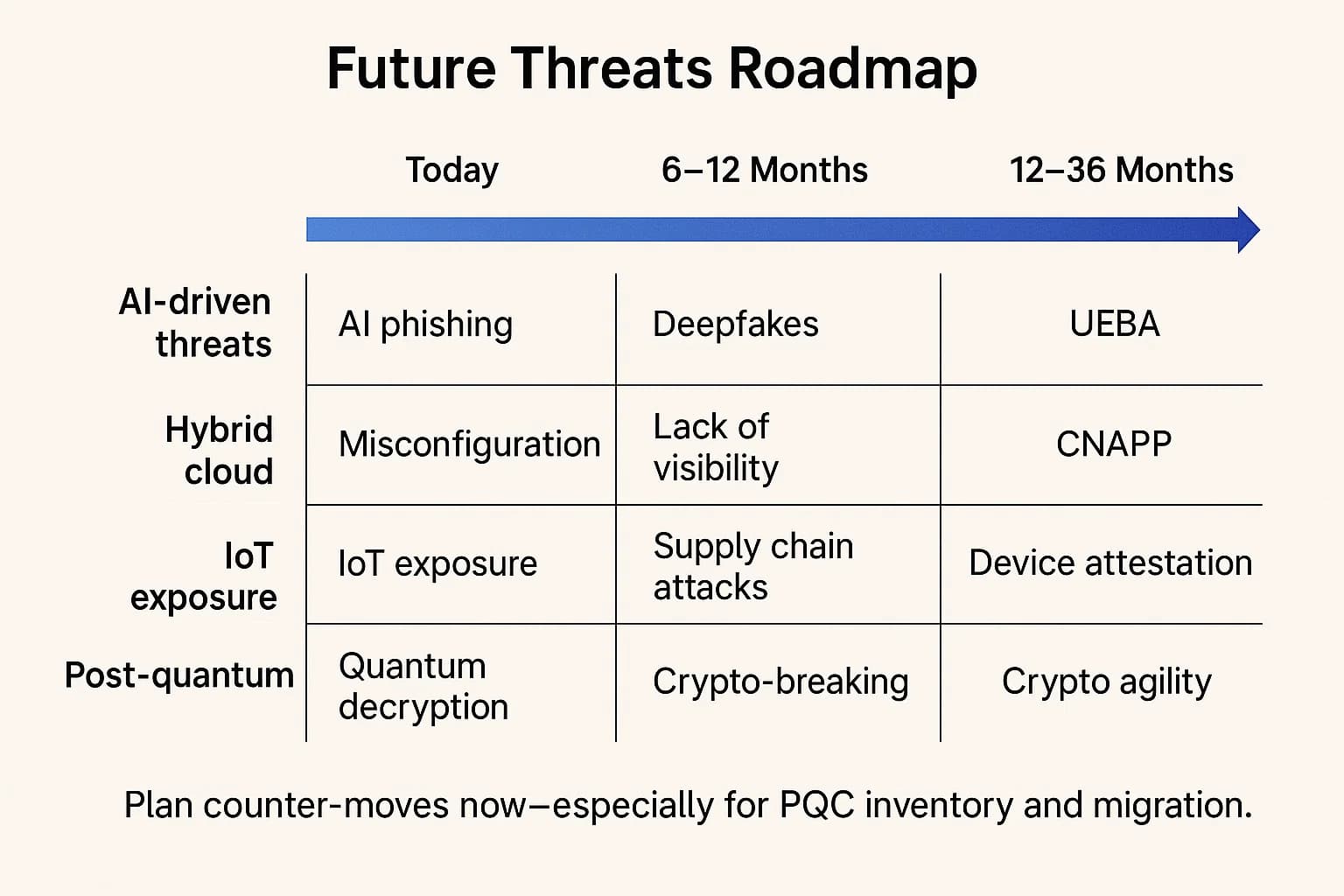

The data security landscape is in a state of perpetual evolution. A forward-looking strategy must anticipate and prepare for the disruptions of tomorrow.

Artificial Intelligence (AI) and Machine Learning (ML) are a double-edged sword, simultaneously empowering defenders and arming attackers.

Architectural shifts toward hybrid cloud and the explosive growth of the Internet of Things (IoT) are creating a vastly more complex attack surface to defend.

Perhaps the most profound long-term threat is the advent of large-scale quantum computers. Running Shor's algorithm, such machines would be capable of breaking today's most widely used public-key algorithms, such as RSA and elliptic-curve cryptography, rendering them obsolete.

This is not a distant problem. It has immediate implications because of "harvest now, decrypt later" attacks. Adversaries, including nation-states, are likely already intercepting and storing massive volumes of encrypted data, intending to hold it until they have access to a quantum computer capable of decrypting it. For any organization with data that must remain confidential for decades (such as government secrets, intellectual property, or health records), this is a present-day danger.
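A common back-of-the-envelope test for this exposure is Mosca's inequality: if x (how many years the data must stay confidential) plus y (how many years your migration to quantum-safe cryptography will take) is greater than z (years until a cryptographically relevant quantum computer exists), then data encrypted today is at risk. Nobody knows z, so every input below is a hypothetical assumption.

```python
def hndl_at_risk(shelf_life_years: float, migration_years: float, years_to_quantum: float) -> bool:
    """Mosca's inequality: data is exposed to harvest-now-decrypt-later if x + y > z."""
    return shelf_life_years + migration_years > years_to_quantum

# Hypothetical inputs: an assumed 15 years until a capable quantum computer.
print(hndl_at_risk(25, 5, 15))  # True: health records kept confidential for 25 years
print(hndl_at_risk(1, 2, 15))   # False: short-lived session data
```

The uncomfortable lesson is that long-lived data can already be on the wrong side of the inequality, whatever the exact value of z.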

In response, NIST is leading the effort to standardize a new generation of Post-Quantum Cryptography (PQC) algorithms and published its first three PQC standards (FIPS 203, 204, and 205) in August 2024. The eventual transition to PQC will be a massive undertaking, requiring a strategic shift toward cryptographic agility: the ability to efficiently migrate to new cryptographic standards. CISOs should begin the multi-year journey now by inventorying all cryptographic assets and developing a strategic roadmap for migration, a true test of a mature, forward-looking data security program.
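The inventory step lends itself to simple automation once assets are catalogued. This is a minimal triage sketch over a hypothetical inventory: quantum-vulnerable public-key algorithms are flagged for migration, algorithms from NIST's first PQC standards (ML-KEM, ML-DSA, SLH-DSA) are marked as already post-quantum, and everything else, such as symmetric ciphers, is sent for review. The system names and the algorithm sets are illustrative assumptions, not a complete catalogue.

```python
from dataclasses import dataclass

# Public-key families that Shor's algorithm would break on a large quantum computer.
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDSA", "ECDH", "Ed25519", "X25519"}
# Algorithms from NIST's first PQC standards (FIPS 203, 204, 205).
POST_QUANTUM = {"ML-KEM", "ML-DSA", "SLH-DSA"}

@dataclass(frozen=True)
class CryptoAsset:
    system: str
    usage: str
    algorithm: str

def triage(asset: CryptoAsset) -> str:
    if asset.algorithm in QUANTUM_VULNERABLE:
        return "migrate"
    if asset.algorithm in POST_QUANTUM:
        return "post-quantum"
    # Symmetric ciphers and hashes (e.g., AES-256) usually need a key-size review, not replacement.
    return "review"

inventory = [  # hypothetical entries
    CryptoAsset("customer-portal", "TLS key exchange", "ECDH"),
    CryptoAsset("payments-api", "code signing", "RSA"),
    CryptoAsset("file-archive", "encryption at rest", "AES-256"),
    CryptoAsset("vpn-pilot", "key encapsulation", "ML-KEM"),
]
for asset in inventory:
    print(f"{asset.system:<16} {asset.algorithm:<8} {triage(asset)}")
```

The hard part in practice is building the inventory itself (certificates, libraries, protocols, vendor products); the triage logic is the easy part.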

1. What is the first step in creating a data security strategy?

The first and most critical step is data discovery and classification. You can't protect what you don't know you have. This involves deploying tools and processes to map where all sensitive data (such as PII, intellectual property, and financial records) is created, stored, and processed across your entire hybrid IT environment.
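To show what "mapping where sensitive data lives" means at the smallest scale, here is a sketch that walks a directory tree, detects a couple of data types per file, and assigns a classification tier. The detectors, tier names, and the rule that SSNs make a file Restricted are all hypothetical; real discovery tools cover databases, SaaS, and cloud storage with far richer classifiers.

```python
import os
import re
import tempfile

# Hypothetical detectors and tiers.
DETECTORS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}
RESTRICTED_TYPES = {"us_ssn"}

def classify_text(text: str) -> tuple[str, set[str]]:
    found = {label for label, rx in DETECTORS.items() if rx.search(text)}
    if found & RESTRICTED_TYPES:
        return "Restricted", found
    if found:
        return "Confidential", found
    return "Internal", found

def discover(root: str) -> dict[str, tuple[str, set[str]]]:
    """Walk `root` and map every file to (classification, detected data types)."""
    inventory = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            with open(path, encoding="utf-8", errors="ignore") as f:
                inventory[path] = classify_text(f.read())
    return inventory

with tempfile.TemporaryDirectory() as root:
    samples = {
        "hr.csv": "id,ssn\n1,123-45-6789\n",
        "contacts.txt": "jane@example.com\n",
        "notes.txt": "quarterly planning notes\n",
    }
    for name, body in samples.items():
        with open(os.path.join(root, name), "w", encoding="utf-8") as f:
            f.write(body)
    for path, (level, types) in sorted(discover(root).items()):
        print(os.path.basename(path), level, sorted(types))
# contacts.txt Confidential ['email']
# hr.csv Restricted ['us_ssn']
# notes.txt Internal []
```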

2. How do data security services help with compliance?

Data security services provide the essential technical and procedural controls required by regulations like GDPR, HIPAA, and PCI DSS. For example, encryption helps meet confidentiality requirements, IAM enforces mandated access controls, and DLP helps prevent the unauthorized exfiltration of regulated data, all of which are core tenets of a compliance program.

3. What is the difference between a vulnerability assessment and a penetration test?

A vulnerability assessment is an automated scan that identifies known weaknesses, like checking for unlocked doors and windows in a building. A penetration test is a manual, goal-oriented exercise in which ethical hackers actively try to break in, testing whether your defenses actually hold up under a real-world attack scenario.
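The "known weaknesses" half of that comparison is essentially a lookup against published advisories. This sketch compares installed versions against a hypothetical advisory feed (the package names and fixed versions are made up) and assumes simple dotted numeric versions. Notice what it cannot do: it never tries to exploit or chain findings, which is the job of a penetration test.

```python
# Hypothetical advisory feed: package name -> first version that fixes a known flaw.
ADVISORIES = {"acme-http": (2, 5, 0), "acme-tls": (3, 0, 7)}

def parse_version(version: str) -> tuple[int, ...]:
    # Assumes simple dotted numeric versions such as "2.4.1".
    return tuple(int(part) for part in version.split("."))

def assess(installed: dict[str, str]) -> list[str]:
    """Flag installed packages older than the first fixed version."""
    findings = []
    for package, version in sorted(installed.items()):
        fixed = ADVISORIES.get(package)
        if fixed is not None and parse_version(version) < fixed:
            fixed_str = ".".join(str(n) for n in fixed)
            findings.append(f"{package} {version} is vulnerable (fixed in {fixed_str})")
    return findings

host_inventory = {"acme-http": "2.4.1", "acme-tls": "3.1.0", "internal-app": "1.0"}
print(assess(host_inventory))
# ['acme-http 2.4.1 is vulnerable (fixed in 2.5.0)']
```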

4. What are the most common causes of data breaches?

According to recent reports from IBM and Verizon, the most common external attack vectors include phishing, the use of stolen credentials, and the exploitation of vulnerabilities in public-facing applications. However, a significant portion of breaches (nearly 60%) involve a human element, which includes both malicious actions and simple, unintentional errors.

5. How does Zero Trust improve data security?

Zero Trust improves security by eliminating the dangerous concept of implicit trust. It operates on a "never trust, always verify" principle, meaning every user and device must be authenticated and authorized for every single resource they try to access. This drastically reduces an attacker's ability to move laterally within a network after an initial compromise.

6. Can data security be fully automated?

While many data security tasks can and should be automated (such as threat detection, policy enforcement, and initial incident response), a skilled human element remains crucial. People are needed for strategic decision-making, complex incident analysis, proactive threat hunting, and understanding the business context behind security events. The most effective approach combines AI-driven automation with expert human oversight.

7. What is a cybersecurity risk assessment?

A cybersecurity risk assessment is a formal process used to identify, analyze, and evaluate risks to your organization's data, systems, and operations. It helps you understand the likelihood and potential impact of various threats, allowing you to prioritize security efforts and make informed, risk-based decisions about where to invest your resources.
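A common way to turn likelihood and impact into priorities is a simple scoring matrix. This sketch assumes hypothetical 1 to 5 scales, a made-up risk register, and arbitrary rating thresholds; many organizations use their own scales or quantitative methods instead.

```python
# Hypothetical risk register scored on 1-5 scales.
risks = [
    {"threat": "Phishing leads to credential theft", "likelihood": 4, "impact": 4},
    {"threat": "Ransomware on file servers", "likelihood": 3, "impact": 5},
    {"threat": "Lost unencrypted laptop", "likelihood": 2, "impact": 3},
]

def score(risk: dict) -> int:
    """Risk score = likelihood x impact (1 to 25 on these scales)."""
    return risk["likelihood"] * risk["impact"]

def rating(s: int) -> str:
    # Hypothetical thresholds; tune them to your organization's risk appetite.
    return "High" if s >= 15 else "Medium" if s >= 8 else "Low"

for r in sorted(risks, key=score, reverse=True):
    s = score(r)
    print(f"{s:>2} {rating(s):<6} {r['threat']}")
# 16 High   Phishing leads to credential theft
# 15 High   Ransomware on file servers
#  6 Low    Lost unencrypted laptop
```

The ranked list is what feeds the "where to invest" decision: the top entries get budget and owners first.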

The threats of 2025 demand more than just awareness; they require readiness. If you're looking to validate your security posture, identify hidden risks, or build a resilient defense strategy, DeepStrike is here to help. Our team of practitioners provides clear, actionable guidance to protect your business.

Explore our penetration testing services for businesses to see how we can uncover vulnerabilities before attackers do. Drop us a line; we’re always ready to dive in.

Mohammed Khalil is a Cybersecurity Architect at DeepStrike, specializing in advanced penetration testing and offensive security operations. With certifications including CISSP, OSCP, and OSWE, he has led numerous red team engagements for Fortune 500 companies, focusing on cloud security, application vulnerabilities, and adversary emulation. His work involves dissecting complex attack chains and developing resilient defense strategies for clients in the finance, healthcare, and technology sectors.

Stay secure with DeepStrike penetration testing services. Reach out for a quote or customized technical proposal today.
