October 10, 2025

Updated: October 10, 2025

A data-rich 2025 guide to AI-powered attacks, with cases, costs, and controls

Khaled Hassan

AI is changing both defense and offense in security. Attackers now use AI to generate realistic phishing at scale, clone executive voices, probe exposed AI infrastructure, and automate intrusion steps. Defenders use AI to detect anomalies faster, triage alerts, and contain incidents. Yet skills gaps and misconfigured AI stacks open new doors. This guide compiles the most current 2025 statistics on AI cyber attacks, translates the numbers into business impact, and gives a prioritized playbook you can execute this quarter. Expect verified sources, fresh examples, and practical controls that match how attacks happen today.

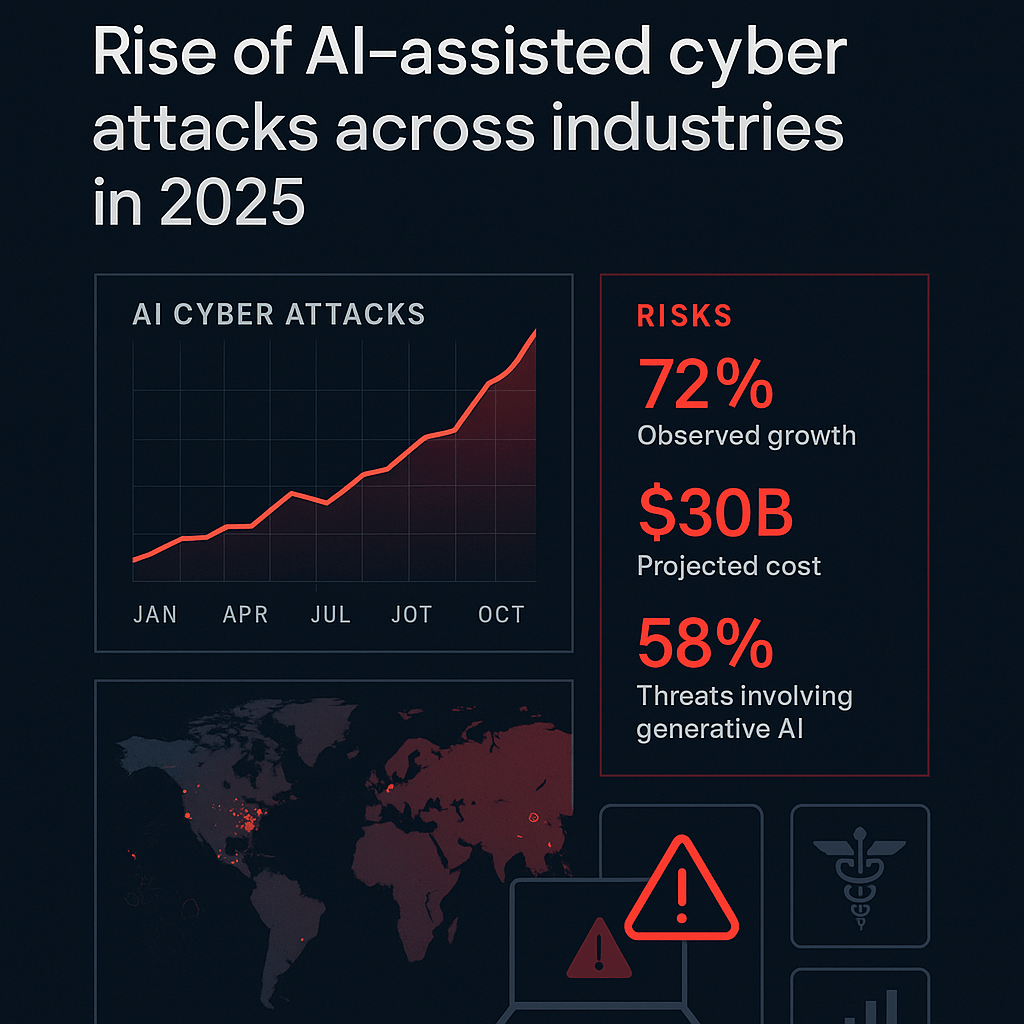

Rise of AI-assisted cyber attacks across industries in 2025, revealing a sharp 72% increase in incidents and $30B in projected global damages.

2025 confirms a saturated threat environment. The Verizon DBIR 2025 draws on its largest evidence base yet and shows that human-focused techniques such as phishing and pretexting still dominate. AI raises both the quality and the quantity of lures. Meanwhile, national and industry reports describe a mix of old and new tactics: AI makes reconnaissance and social engineering cheaper and faster, and misconfigurations in cloud and AI stacks create direct paths to sensitive data.

Stats that matter right now

Attackers use AI to create believable emails, texts, and call scripts that match brand voice and personal style. Microsoft’s Cyber Signals shows rapid growth in AI-assisted fraud patterns and describes ecosystem responses across identity, browser, and operating system layers. For defenders, the message is clear: expect messages that look and sound real, and adjust verification flows accordingly.

Practical impact

The FBI warns about ongoing impersonation of senior officials by text and voice, and about spoofed sites that look identical to official ones. The same tradecraft drives executive fraud and BEC in the private sector. Your teams must assume realistic audio or websites can be fake, and verify through a second trusted channel.
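Spoofed sites usually sit on domains that differ from the real one by a character or two. The sketch below flags such lookalikes with a plain edit-distance check. The trusted domain list and the distance threshold are hypothetical values chosen for illustration, not taken from the FBI guidance.

```python
"""Flag domains that closely resemble, but do not exactly match, trusted domains.

Hypothetical sketch: TRUSTED_DOMAINS and max_distance are illustrative assumptions.
"""

TRUSTED_DOMAINS = {"example.gov", "example-bank.com"}  # hypothetical allowlist


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if characters match)
            ))
        prev = curr
    return prev[-1]


def classify_domain(domain: str, max_distance: int = 2) -> str:
    """Return 'trusted', 'lookalike', or 'unknown' for a domain name."""
    domain = domain.lower().rstrip(".")
    if domain in TRUSTED_DOMAINS:
        return "trusted"
    for trusted in TRUSTED_DOMAINS:
        if levenshtein(domain, trusted) <= max_distance:
            return "lookalike"
    return "unknown"
```

For example, `classify_domain("examp1e.gov")` is flagged as a lookalike. A real filter would also need to handle Unicode homoglyphs and internationalized (punycode) domains, which this sketch ignores.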

AI stacks often include vector databases, model registries, and inference endpoints. Trend Micro reports more than 200 completely unprotected servers running Chroma, an open-source vector database, and many more that are only partially protected, with read or write access available without credentials. That exposure enables theft of embeddings and documents, poisoning of indexes, and pivoting into the rest of the environment. Locking down AI components is now basic hygiene.
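A quick way to test your own AI endpoints for this failure is to send a request with no credentials and see whether data comes back. The sketch below does that with the standard library. The verdict labels are hypothetical, and which path a given vector database exposes (for example a heartbeat or collection-listing route) depends on the product and version, so check its documentation before choosing URLs.

```python
"""Classify whether an AI service endpoint answers an unauthenticated request.

Sketch under assumptions: verdict names are illustrative; pick probe URLs from
your own product documentation and only test hosts you are authorized to test.
"""
import urllib.error
import urllib.request


def classify_unauthenticated_response(status: int) -> str:
    """Map the HTTP status of a credential-less request to an exposure verdict."""
    if 200 <= status < 300:
        return "exposed"        # served content with no credentials at all
    if status in (401, 403):
        return "auth-enforced"  # server demanded or refused credentials
    return "inconclusive"       # 404s, 5xx and others need a human look


def probe(url: str, timeout: float = 5.0) -> str:
    """Send one GET without credentials and classify the result."""
    request = urllib.request.Request(url, method="GET")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return classify_unauthenticated_response(response.status)
    except urllib.error.HTTPError as err:  # non-2xx responses raise HTTPError
        return classify_unauthenticated_response(err.code)
    except OSError:                        # DNS failures, refused connections, timeouts
        return "unreachable"
```

Running `probe()` on a schedule against every known AI host, and alerting on any `"exposed"` result, turns the Trend Micro finding into a recurring check rather than a one-off audit.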

Press and analyst coverage highlights agentic AI as a near-term challenge. Security leaders advise treating agents like interns: restricted privileges, strong guardrails, and runtime monitoring to prevent objective drift or tool misuse. This governance approach helps keep agents from becoming privileged attackers inside your network.
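The "treat agents like interns" advice maps naturally onto a deny-by-default gate in front of every tool call. The sketch below is hypothetical: the agent name, tool names, and policy table are invented for illustration, and real agent frameworks provide their own hook points where a check like this would sit.

```python
"""Least-privilege gate for AI agent tool calls.

Hypothetical sketch: agent names, tool names, and POLICIES are illustrative.
"""
import logging
from dataclasses import dataclass, field

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent-gate")


@dataclass
class AgentPolicy:
    allowed_tools: set[str]
    needs_human_approval: set[str] = field(default_factory=set)


POLICIES = {
    "support-triage-agent": AgentPolicy(
        allowed_tools={"search_kb", "draft_reply", "send_reply"},
        needs_human_approval={"send_reply"},  # outbound actions wait for a person
    ),
}


def authorize_tool_call(agent: str, tool: str, human_approved: bool = False) -> bool:
    """Deny by default and log every decision for runtime monitoring."""
    policy = POLICIES.get(agent)
    if policy is None or tool not in policy.allowed_tools:
        log.warning("DENY %s -> %s (not allowlisted)", agent, tool)
        return False
    if tool in policy.needs_human_approval and not human_approved:
        log.warning("HOLD %s -> %s (awaiting human approval)", agent, tool)
        return False
    log.info("ALLOW %s -> %s", agent, tool)
    return True
```

The decision log doubles as the runtime monitoring feed: a burst of `DENY` entries from one agent is a cheap signal of objective drift or prompt-injected tool misuse.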

Public sector targets draw persistent attention from capable adversaries. Microsoft reports scale and sophistication against governments, while ENISA notes malware and ransomware disguised as AI tool installers. Critical infrastructure exposure rises when operational technology connects to cloud AI services without strict segmentation and monitoring.
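One simple defense against malware disguised as AI tool installers is to refuse to run any installer whose SHA-256 digest does not match the value the vendor publishes. The sketch below assumes you obtained that expected digest from the vendor's official site, reached by typing its address rather than following a link; the file path and digest are inputs you supply. Vendor code-signing checks, where available, are a stronger complement.

```python
"""Verify an installer's SHA-256 digest against the vendor-published value.

Sketch: path and expected_hex are caller-supplied assumptions.
"""
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so large installers are not read into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def installer_is_authentic(path: Path, expected_hex: str) -> bool:
    """True only if the computed digest equals the published one (case-insensitive)."""
    return hmac.compare_digest(sha256_of(path), expected_hex.strip().lower())
```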

Finance teams face AI-driven BEC, voice-cloned executives, and vendor impersonation. Fraud funnels now blend deepfake calls with spoofed websites that harvest MFA seeds or prompt malicious installs. Controls that require multi-channel approval for any money movement are essential.
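Multi-channel approval can be enforced in code rather than left to habit. The sketch below releases a payment only when people other than the requester have confirmed it on at least two independent channels. The channel names and the two-channel minimum are hypothetical policy choices for illustration, not a standard.

```python
"""Require confirmations on at least two independent channels before money moves.

Hypothetical sketch: INDEPENDENT_CHANNELS and min_channels are illustrative policy.
"""
from dataclasses import dataclass

# Channels an attacker who controls the original email or call cannot easily reuse.
INDEPENDENT_CHANNELS = {"callback_known_number", "in_person", "erp_approval"}


@dataclass(frozen=True)
class Confirmation:
    channel: str
    approver: str


def payment_release_allowed(requester: str, confirmations: list[Confirmation],
                            min_channels: int = 2) -> bool:
    """Count distinct independent channels confirmed by someone other than the requester."""
    channels = {
        c.channel
        for c in confirmations
        if c.channel in INDEPENDENT_CHANNELS and c.approver != requester
    }
    return len(channels) >= min_channels
```

A reply to the original email does not count, because a deepfake or spoofed thread controls that channel.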

Sectors with data-rich environments and complex supply chains are attractive targets. Misconfigured AI components and sprawling third-party SaaS increase the blast radius. Continuous discovery and takedown of exposed assets, plus vendor risk reviews for any LLM or vector DB integration, reduce common failure points.
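Continuous discovery works best as a diff: compare what is actually reachable from outside against what the inventory says should exist. The sketch assumes both inputs are sets of `host:port` strings; how you discover exposed services (an external scanner, an attack-surface tool, or cloud provider APIs) is left open, and the report keys are hypothetical names.

```python
"""Diff externally discovered assets against the approved inventory.

Sketch under assumptions: inputs are sets of "host:port" strings; key names are illustrative.
"""


def exposure_report(discovered: set[str], inventory: set[str]) -> dict[str, set[str]]:
    return {
        "unknown_exposed": discovered - inventory,  # shadow assets: find an owner or take down
        "known_exposed": discovered & inventory,    # confirm each one really should be public
        "not_seen": inventory - discovered,         # inventoried but not observed on this run
    }
```

The `unknown_exposed` set is where an unregistered vector database or inference endpoint would show up.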

Fresh 2025 UK survey data shows very high breach exposure for universities and schools, a trend that mirrors broader public sector strain and limited resources for security programs.

The FBI PSA on senior official impersonation describes live text and voice campaigns since April 2025. The lesson for enterprises is to protect finance, HR, and IT helpdesk flows with callback verification to a known number, never to the one in the message.
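The "known number, never the one in the message" rule can be encoded directly in the workflow that opens a callback task. In this hypothetical sketch, `VENDOR_DIRECTORY` stands in for your vendor master or HR system of record, and the phone numbers are placeholders.

```python
"""Choose the callback number for a payment or bank-detail change request.

Hypothetical sketch: VENDOR_DIRECTORY and its numbers are placeholders.
"""
import logging
import re
from typing import Optional

log = logging.getLogger("callback")

VENDOR_DIRECTORY = {"acme-supplies": "+1-555-0100"}  # hypothetical system of record


def normalize(number: str) -> str:
    """Keep only digits and '+' so formatting differences do not matter."""
    return re.sub(r"[^\d+]", "", number)


def callback_number(vendor_id: str, number_in_message: Optional[str]) -> str:
    """Return the directory number; the number supplied in the request is never used."""
    known = VENDOR_DIRECTORY.get(vendor_id)
    if known is None:
        raise LookupError(f"No verified contact on file for {vendor_id!r}; escalate.")
    if number_in_message and normalize(number_in_message) != normalize(known):
        # A mismatch is itself a fraud signal worth recording.
        log.warning("Message supplied %s, directory has %s", number_in_message, known)
    return known
```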

Trend Micro’s repeat scans in May and July 2025 found hundreds of open Chroma servers, plus other AI infrastructure running in containers with weak defaults. Enterprises should treat AI components like any Internet-facing app: add authentication, rate limiting, and logging, and continuously scan for exposure.
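Of the controls listed, rate limiting is the easiest to get subtly wrong. A token bucket is one common approach: each request spends a token, and tokens refill at a fixed rate up to a burst cap. The rate and burst values below are illustrative, and in production this logic often lives in an API gateway or reverse proxy in front of the inference or vector-database endpoint rather than in application code.

```python
"""Token-bucket rate limiter for an inference or vector-database endpoint.

Sketch: rate_per_sec and burst are illustrative; keep one bucket per API key or client.
"""
import time


class TokenBucket:
    def __init__(self, rate_per_sec: float, burst: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = burst
        self.tokens = float(burst)  # start full so a client can burst immediately
        self.clock = clock          # injectable for testing
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Rejected requests are worth logging too, since sustained throttling against an embedding or search endpoint can indicate bulk extraction attempts.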

A run of public sector and supplier incidents across 2025 shows that classic misconfigurations, not zero days, still drive material events. AI accelerates discovery and exploitation, which is why asset inventory and configuration baselines matter more than ever.
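A configuration baseline only helps if drift from it is detected. The sketch compares a component's live settings to an approved baseline and reports every difference. The setting names and values are hypothetical examples for an AI service, not any product's real configuration schema.

```python
"""Compare a service's live configuration with its approved baseline.

Hypothetical sketch: BASELINE keys and values are illustrative, not a real product schema.
"""

BASELINE = {
    "auth_enabled": True,
    "bind_address": "127.0.0.1",
    "tls": True,
    "request_logging": True,
}


def config_drift(live: dict) -> dict[str, tuple]:
    """Return {setting: (expected, actual)} for each baseline setting that differs."""
    return {
        key: (expected, live.get(key))  # a missing setting shows up as None
        for key, expected in BASELINE.items()
        if live.get(key) != expected
    }
```

An empty result means the component matches its baseline; anything else, such as `auth_enabled` flipping to `False` after a container redeploy, should raise a ticket.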

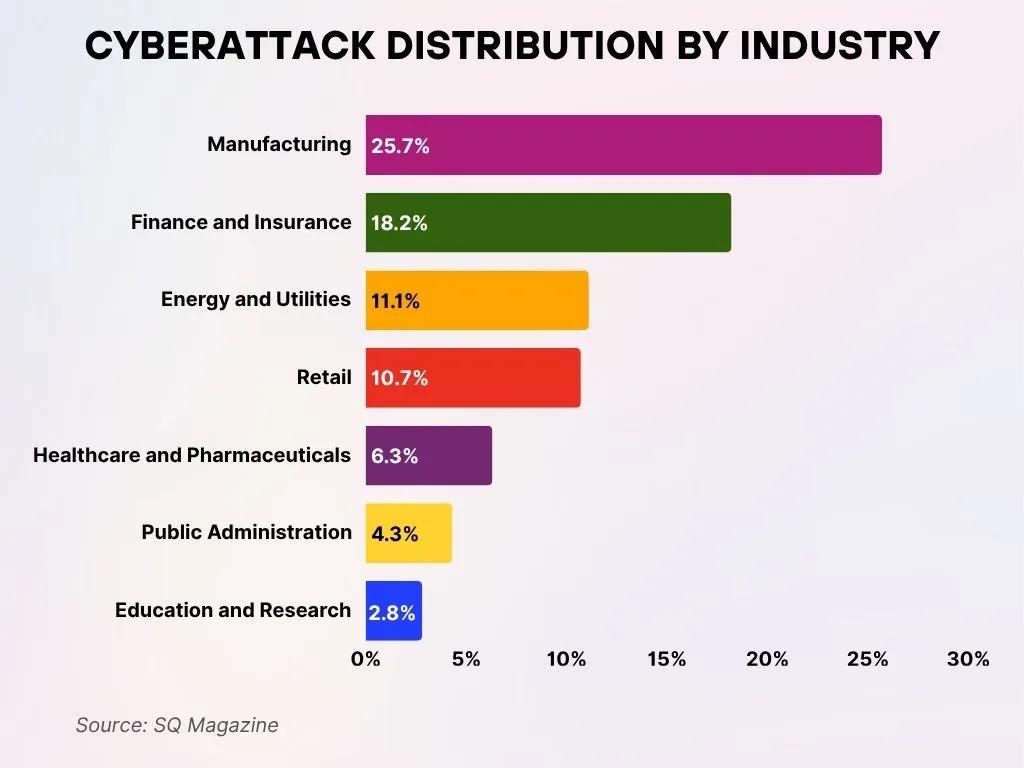

Industries most affected by AI-driven cyber attacks

IBM’s Cost of a Data Breach 2025 emphasizes a key pattern. Organizations using security AI and automation tend to reduce average breach cost compared to those that do not. Third-party analysis of the same dataset underscores the adoption curve, with more enterprises reporting at least limited use of AI in detection and response.

The longer an attacker lingers, the higher the cost for ransom negotiation, data exfiltration, legal exposure, and reputational damage. AI helps by accelerating anomaly detection and first-response actions. Teams that pair AI with rehearsed playbooks lower mean time to detect and contain, which shrinks tail risk.
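To track whether AI and rehearsed playbooks are actually lowering mean time to detect (MTTD) and mean time to contain (MTTC), compute both from incident timestamps. In this sketch, detection is measured from first malicious activity and containment from detection; some programs measure containment from initial compromise instead, so state your definition. The record field names are hypothetical.

```python
"""Compute MTTD and MTTC, in hours, from incident records.

Sketch: field names 'started', 'detected', 'contained' are illustrative assumptions.
"""
from datetime import datetime, timedelta
from statistics import mean


def mean_hours(deltas: list[timedelta]) -> float:
    return mean([d.total_seconds() for d in deltas]) / 3600


def mttd_mttc(incidents: list[dict]) -> tuple[float, float]:
    detect = [i["detected"] - i["started"] for i in incidents]      # dwell before detection
    contain = [i["contained"] - i["detected"] for i in incidents]   # detection to containment
    return mean_hours(detect), mean_hours(contain)
```

For two incidents detected after 4 and 2 hours and contained 2 and 6 hours after detection, the sketch yields an MTTD of 3.0 hours and an MTTC of 4.0 hours.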

What percent of organizations report AI is changing their threat exposure in 2025?

The sources cited here do not converge on a single percentage. Major vendor and agency reports describe AI as a significant factor in both offense and defense, with rapid growth in deception and fraud patterns.

Are AI deepfakes a real driver of business email compromise?

Yes. Alerts in 2025 cover voice and text impersonation with realistic content. Require out-of-band verification for any request that moves money or grants access.

Is exposed AI infrastructure actually common?

Yes. Industry scans show hundreds of unprotected AI data stores and endpoints, often containerized and reachable on the public Internet.

Does AI really lower breach costs for defenders?

Organizations that adopt security AI and automation commonly report lower average breach costs than peers without these controls, according to IBM’s Cost of a Data Breach 2025 report.

What single control should finance teams implement this quarter?

A two-part rule: phishing-resistant MFA for all finance accounts, plus mandatory callback verification for any payment or bank-detail change.

Where can I find an authoritative annual baseline on breach trends?

Start with the Verizon DBIR 2025 for incident patterns, then cross-check with IBM’s cost study and recent ENISA alerts for sector nuance.

2025 confirms that AI changes how attacks start, spread, and get paid. The numbers show high breach volume, realistic impersonation, and a growing problem of exposed AI infrastructure. The good news: organizations that pair security AI with strong identity controls, locked-down AI components, and rehearsed playbooks reduce cost and shorten dwell time. Use the data here to prioritize three next moves. Remove public exposure for AI services. Require phishing-resistant MFA and callback verification. Apply AI in detection where it has the highest return, and validate with tests that mirror how attacks work today.

Stay secure with DeepStrike penetration testing services. Reach out today for a quote or a customized technical proposal.
